Black Holes, Hawking Radiation, and the Firewall (for CS229)

Noah Miller

December 26, 2018

Abstract

Here I give a friendly presentation of the black hole information problem and the firewall paradox for computer science people who don’t know physics (but would like to). Most of the notes are just requisite physics background. There are six sections. 1: Special Relativity. 2: General Relativity. 3: Quantum Field Theory. 4: Statistical Mechanics. 5: Hawking Radiation. 6: The Information Paradox.

Contents

1 Special Relativity
1.1 Causality and light cones
1.2 Space-time interval
1.3 Penrose Diagrams
2 General Relativity
2.1 The metric
2.2 Geodesics
2.3 Einstein’s field equations
2.4 The Schwarzschild metric
2.5 Black Holes
2.6 Penrose Diagram for a Black Hole
2.7 Black Hole Evaporation
3 Quantum Field Theory
3.1 Quantum Mechanics
3.2 Quantum Field Theory vs Quantum Mechanics
3.3 The Hilbert Space of QFT: Wavefunctionals
3.4 Two Observables
3.5 The Hamiltonian
3.6 The Ground State
3.7 Particles
3.8 Entanglement properties of the ground state
4 Statistical Mechanics
4.1 Entropy
4.2 Temperature and Equilibrium
4.3 The Partition Function
4.4 Free energy
4.5 Phase Transitions
4.6 Example: Box of Gas
4.7 Shannon Entropy
4.8 Quantum Mechanics, Density Matrices
4.9 Example: Two state system
4.10 Entropy of Mixed States
4.11 Classicality from environmental entanglement
4.12 The Quantum Partition Function
5 Hawking Radiation
5.1 Quantum Field Theory in Curved Space-time
5.2 Hawking Radiation
5.3 The shrinking black hole
5.4 Hawking Radiation is thermal
5.5 Partner Modes
6 The Information Paradox
6.1 What should the entropy of a black hole be?
6.2 The Area Law
6.3 Non unitary time evolution?
6.4 No. Unitary time evolution!
6.5 Black Hole Complementarity
6.6 The Firewall Paradox
6.7 Harlow Hayden


1 Special Relativity

1.1 Causality and light cones

There are four dimensions: the three spatial dimensions and time.

Every “event” that happens takes place at a coordinate labelled by

(t, x, y, z).

However, it is difficult to picture things in four dimensions, so usually when we draw pictures we just throw away the two extra spatial dimensions, labelling points by

(t, x).

With this simplification, we can picture all points on the 2D plane.

Figure 1: space-time as a 2D plane.

If something moves with a velocity v, its “worldline” is just given by

$$ x = vt. \tag{1} $$


Figure 2: The worldline of something moving with velocity v.

A photon travels with velocity c. Physicists love to work in units where c = 1. For example, the x axis could be measured in light years and the t axis could be measured in years. In these units, the worldline of a light particle always makes a 45° angle. (This is a very important point!)

Because nothing can travel faster than light, a particle is always

constrained to move within its “lightcone.”

Figure 3: lightcone.

The “past light cone” of a point consists of all of the space-time points that can send a message to that point. The “future light cone” consists of all of the space-time points that can receive a message from that point.


1.2 Space-time interval

In special relativity, time passes slower for things that are moving. If

your friend were to pass you by in a very fast spaceship, you would see

their watch tick slower, their heartbeat thump slower, and their mind

process information slower.

If your friend is moving with velocity v, you will see their time pass slower by a factor of

$$ \gamma = \frac{1}{\sqrt{1 - \frac{v^2}{c^2}}}. \tag{2} $$

For small v, γ ≈ 1. As v approaches c, γ shoots to infinity.
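A quick way to see how γ behaves is to evaluate Eq. 2 for a few speeds. This is a minimal Python sketch in units where c = 1; the function name `lorentz_factor` is my own hypothetical choice, not something from the text.

```python
import math


def lorentz_factor(v: float, c: float = 1.0) -> float:
    """Return gamma = 1 / sqrt(1 - v^2 / c^2) from Eq. 2, for speeds |v| < c."""
    if abs(v) >= c:
        raise ValueError("the speed must be strictly less than c")
    return 1.0 / math.sqrt(1.0 - (v / c) ** 2)


# gamma stays close to 1 for small v and grows without bound as v approaches c.
for v in (0.01, 0.6, 0.9, 0.99, 0.999):
    print(f"v = {v:<6} gamma = {lorentz_factor(v):.4f}")
```

At v = 0.6 this gives γ = 1/√0.64 = 1.25, so a friend moving at 60% of the speed of light ages 4 years for every 5 of yours.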

Let’s say your friend starts at a point $(t_1, x_1)$ and moves at a constant velocity v to a point $(t_2, x_2)$.

Figure 4: A straight line between $(t_1, x_1)$ and $(t_2, x_2)$.

Define

$$ \Delta t = t_2 - t_1, \qquad \Delta x = x_2 - x_1. $$

From your perspective, your friend has moved forward in time by ∆t.

However, because time passes slower for your friend, their watch will

have only ticked forward the amount

$$ \Delta\tau \equiv \frac{\Delta t}{\gamma}. \tag{3} $$

Here, ∆τ is the so-called “proper time” that your friend experiences along their journey from $(t_1, x_1)$ to $(t_2, x_2)$.


Everybody will agree on what ∆τ is. Sure, people using different

coordinate systems will not agree on the exact values of $t_1$, $x_1$, $t_2$, $x_2$, or $v$. However, they will all agree on the value of ∆τ. This is because

∆τ is a physical quantity! We can just look at our friend’s watch and

see how much it ticked along its journey!

Figure 5: The time elapsed on your friend’s watch during their journey

is the invariant “proper time” of that space-time interval.

Usually, people like to write this in a different way, using $v = \Delta x / \Delta t$:

$$ (\Delta\tau)^2 = \frac{(\Delta t)^2}{\gamma^2} = (\Delta t)^2\left(1 - \frac{v^2}{c^2}\right) = (\Delta t)^2 - \frac{1}{c^2}(\Delta x)^2. $$

This is very suggestive. It looks a lot like the expression

$$ (\Delta x)^2 + (\Delta y)^2, $$

which gives the invariant (squared) distance on the 2-dimensional plane.

By analogy, we will rename the proper time ∆τ the “invariant space-time interval” between two points. It gives the “distance” between two space-time points.
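To see the invariance concretely, we can take two events, compute ∆τ² in our frame, and recompute it in the coordinates of a moving observer. This sketch assumes c = 1 and uses the standard Lorentz boost formulas t′ = γ(t − vx), x′ = γ(x − vt), which these notes have not derived; the helper names are hypothetical.

```python
import math


def boost(t: float, x: float, v: float) -> tuple[float, float]:
    """Coordinates of event (t, x) for an observer moving at speed v (c = 1, |v| < 1)."""
    gamma = 1.0 / math.sqrt(1.0 - v ** 2)
    return gamma * (t - v * x), gamma * (x - v * t)


def interval_squared(t1: float, x1: float, t2: float, x2: float) -> float:
    """(Delta tau)^2 = (Delta t)^2 - (Delta x)^2 in units where c = 1."""
    return (t2 - t1) ** 2 - (x2 - x1) ** 2


# Two events on the worldline of a friend moving at v = 0.6: Delta t = 5, Delta x = 3.
event1, event2 = (0.0, 0.0), (5.0, 3.0)
print("our frame:", interval_squared(*event1, *event2))  # 25 - 9 = 16, so Delta tau = 4

# Different observers disagree on Delta t and Delta x, but not on the interval.
for v in (0.3, 0.6, -0.8):
    (t1, x1), (t2, x2) = boost(*event1, v), boost(*event2, v)
    print(f"observer at v = {v:+}: Delta t = {t2 - t1:.3f}, Delta x = {x2 - x1:.3f}, "
          f"interval^2 = {interval_squared(t1, x1, t2, x2):.6f}")
```

For the observer moving at v = 0.6, the friend is at rest, so there the elapsed coordinate time is exactly the proper time ∆τ = 4.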

Note that if we choose two points for which ∆τ = 0, then those points can only be connected by something traveling at the speed of light. So points with a space-time distance ∆τ = 0 lie on a common 45° line on a space-time diagram.


1.3 Penrose Diagrams

Penrose diagrams are used by physicists to study the “causal structure of space-time,” i.e., which points can affect (and be affected by) other points. One difficult thing about our space-time diagrams is that t and x range from −∞ to ∞. Therefore, it would be nice to reparameterize them so that they have a finite range. This will allow us to look at all of space-time on a finite piece of paper.

Doing this will severely distort our diagram and the distances between points. However, we don’t really care about the exact distances between points. The only thing we care about preserving is 45° angles. We are happy to distort everything else.

To recap, a Penrose diagram is just a reparameterization of our usual space-time diagram that

1. is “finite,” i.e. “compactified,” i.e. can be drawn on a page
2. distorts distances but preserves 45° angles
3. lets us easily see how all space-time points are causally related.

So let’s reparameterize! Define new coordinates u and v by

$$ u \pm v = \arctan(t \pm x). \tag{4} $$

As promised, $u, v \in \left(-\frac{\pi}{2}, \frac{\pi}{2}\right)$. So now let’s draw our Penrose diagram!

Figure 6: The Penrose diagram for flat space.


Figure 7: Lines of constant t and constant x.
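Solving Eq. 4 for u and v gives u = [arctan(t + x) + arctan(t − x)]/2 and v = [arctan(t + x) − arctan(t − x)]/2. The Python sketch below (the function name is hypothetical) checks the two properties we asked for: every event lands in a finite region, and a light ray, which has constant t − x, still has constant u − v, so it is still drawn at 45°.

```python
import math


def penrose_coords(t: float, x: float) -> tuple[float, float]:
    """Solve u + v = arctan(t + x) and u - v = arctan(t - x) (Eq. 4) for (u, v)."""
    a, b = math.atan(t + x), math.atan(t - x)
    return (a + b) / 2, (a - b) / 2


# Events arbitrarily far away still land inside the finite region |u|, |v| < pi/2.
for t, x in [(0.0, 0.0), (1e6, 3.0), (-1e6, 1e6), (2.0, -1e9)]:
    u, v = penrose_coords(t, x)
    print(f"(t, x) = ({t:g}, {x:g}) -> (u, v) = ({u:.4f}, {v:.4f})")

# A light ray x = t + 2 has constant t - x = -2, hence constant u - v = arctan(-2):
# it is still a 45-degree line in the (v, u) plane.
for t in (-5.0, 0.0, 5.0, 50.0):
    u, v = penrose_coords(t, t + 2.0)
    print(f"t = {t:5.1f}: u - v = {u - v:.6f}")
```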

Let’s talk about a few features of the diagram. The bottom corner is the “distant past.” All particles moving slower than c will emerge from there. Likewise, the top corner is the “distant future,” where all particles moving slower than c will end up. Even though each is just one point in our picture, they really represent an infinite number of points.

Figure 8: The worldline of a massive particle.

The right corner and left corner are two points called “spacelike infinity.” Nothing physical ever comes out of those points.

The diagonal edges are called “lightlike infinity.” Photons emerge from one diagonal, travel at a 45° angle, and end up at another diagonal.

Figure 9: Worldlines of photons on our Penrose diagram.

From this point forward, we “set” c = 1 in all of our equations to

keep things simple.


2 General Relativity

2.1 The metric

Space-time is actually curved, much like the surface of the Earth.

However, locally, the Earth doesn’t look very curved. While it is not

clear how to measure large distances on a curved surface, there is no

trouble measuring distances on a tiny scale where things are basically

flat.

Figure 10: A curved surface is flat on tiny scales. Here, the distance,

A.K.A proper time, between nearby points is labelled dτ .

Say you have two points which are very close together on a curved space-time, and an observer travels between the two at a constant velocity. Say the two points are separated by the infinitesimal interval

$$ dx^\mu = (dt, dx, dy, dz) $$

where µ = 0, 1, 2, 3.

In general we can write the proper time dτ elapsed on the observer’s watch by

$$ d\tau^2 = \sum_{\mu=0}^{3} \sum_{\nu=0}^{3} g_{\mu\nu}\, dx^\mu dx^\nu \tag{5} $$

for some 16 numbers $g_{\mu\nu}$.

Eq. 5 might be puzzling to you, but it shouldn’t be. If anything, it’s just a definition of $g_{\mu\nu}$. If two nearby points have a tiny space-time distance dτ, then $d\tau^2$ necessarily has to be expressible in the above form for two close points. There are no terms linear in $dx^\mu$ because they would not match the dimensionality of our tiny $d\tau^2$ (they would be “too big”). There are no terms of order $(dx^\mu)^3$ because those are too small for our consideration. Therefore, Eq. 5, by just being all possible quadratic combinations of $dx^\mu$, is the most general possible form for a distance we could have. I should note that Eq. 5 could be written as

$$ d\tau^2 = a^T M a $$

where the vector $a = dx^\mu$ and the 4 × 4 matrix $M = g_{\mu\nu}$.

In general relativity, $g_{\mu\nu}$ is called the “metric.” It varies from point to point. People always define it to be symmetric, i.e. $g_{\mu\nu} = g_{\nu\mu}$, without loss of generality.

The only difference between special relativity and general relativity is that in special relativity we only think about the flat metric

$$ d\tau^2 = dt^2 - dx^2 - dy^2 - dz^2 \tag{6} $$

where

$$ g_{\mu\nu} = \begin{pmatrix} 1 & 0 & 0 & 0 \\ 0 & -1 & 0 & 0 \\ 0 & 0 & -1 & 0 \\ 0 & 0 & 0 & -1 \end{pmatrix}. \tag{7} $$

However, in general relativity, we are interested in dynamical metrics

which vary from point to point.
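Eq. 5 is just a quadratic form, so with the flat metric of Eq. 7 it takes one line of NumPy. The sketch below (it assumes NumPy; the function names are hypothetical) evaluates it both as $a^T M a$ and as the explicit double sum, and also shows why taking $g_{\mu\nu}$ symmetric loses nothing: an antisymmetric piece added to the metric never changes dτ².

```python
import numpy as np

# Flat metric of Eq. 7, coordinate order (t, x, y, z), units with c = 1.
g = np.diag([1.0, -1.0, -1.0, -1.0])


def dtau_squared(g: np.ndarray, dx: np.ndarray) -> float:
    """Eq. 5 as a matrix product: dtau^2 = a^T M a with a = dx^mu and M = g_{mu nu}."""
    return float(dx @ g @ dx)


def dtau_squared_loops(g: np.ndarray, dx: np.ndarray) -> float:
    """Eq. 5 written out as the explicit double sum over mu and nu."""
    total = 0.0
    for mu in range(4):
        for nu in range(4):
            total += g[mu, nu] * dx[mu] * dx[nu]
    return float(total)


dx = np.array([1.0, 0.3, -0.2, 0.1])  # a small step (dt, dx, dy, dz)
print(dtau_squared(g, dx), dtau_squared_loops(g, dx))  # both 1 - 0.09 - 0.04 - 0.01

# Adding an antisymmetric matrix to g never changes dtau^2, which is why
# taking g_{mu nu} symmetric costs nothing.
rng = np.random.default_rng(0)
B = rng.normal(size=(4, 4))
antisym = B - B.T
print(np.isclose(dtau_squared(g + antisym, dx), dtau_squared(g, dx)))  # True
```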

I should mention one further thing. Just because Eq. 5 says $d\tau^2 = (\text{something})$, that doesn’t mean that $d\tau^2$ is the square of some quantity “dτ.” This is because the metric $g_{\mu\nu}$ is not positive definite. We can see that for two nearby points that are contained within each other’s light cones, $d\tau^2 > 0$. However, if they are outside of each other’s light cones, then $d\tau^2 < 0$, meaning $d\tau^2$ is not the square of any real dτ. If $d\tau^2 = 0$, then the points are on the “rim” of each other’s light cones.
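The sign of dτ² is easy to compute, so here is a small Python sketch (it assumes NumPy and the flat metric of Eq. 7 with c = 1; the function name is hypothetical) that sorts nearby pairs of points into the three cases. The labels “timelike,” “lightlike,” and “spacelike” are the standard names for these cases.

```python
import numpy as np

MINKOWSKI = np.diag([1.0, -1.0, -1.0, -1.0])  # Eq. 7, with c = 1


def classify_separation(dx, g=MINKOWSKI, tol=1e-12) -> str:
    """Classify a small step dx = (dt, dx, dy, dz) by the sign of dtau^2 = dx^T g dx."""
    dx = np.asarray(dx, dtype=float)
    s = float(dx @ g @ dx)
    if s > tol:
        return "timelike"   # inside each other's light cones
    if s < -tol:
        return "spacelike"  # outside each other's light cones
    return "lightlike"      # on the rim of each other's light cones


print(classify_separation([1.0, 0.5, 0.0, 0.0]))  # slower than light: timelike
print(classify_separation([1.0, 0.0, 1.0, 0.0]))  # a light ray: lightlike
print(classify_separation([1.0, 2.0, 0.0, 0.0]))  # would need v > c: spacelike
```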

While the metric gives us an infinitesimal notion of distance, we have to integrate it in order to figure out a macroscopic notion of distance. Say you have a path in space-time. The total “length” of that path ∆τ is just the integral of dτ along the path:

$$ \Delta\tau = \int d\tau = \int \sqrt{\sum_{\mu,\nu} g_{\mu\nu}\, dx^\mu dx^\nu}. \tag{8} $$

If an observer travels along that path, then ∆τ will be the proper time they experience from the start of the path to the end of the path. Remember that the proper time is still a physical quantity that all observers can agree on. It’s just how much time elapses on the observer’s watch.


Figure 11: The proper time ∆τ along a path in space-time gives the elapsed proper time for a clock which follows that path.

2.2 Geodesics

Let’s think about 2D flat space-time again. Imagine all the paths that start at $(t_1, x_1)$ and end at $(t_2, x_2)$. If we integrate dτ along one of these paths, we will get the proper time experienced by an observer travelling along that path.

Figure 12: Each path from $(t_1, x_1)$ to $(t_2, x_2)$ has a different proper time $\Delta\tau = \int d\tau$.

Remember that when things travel faster, time passes slower. The more wiggly a path is, the faster that observer is travelling on average, and the less proper time passes for them. The observer travelling on the straight path experiences the most proper time of all.
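We can check the claim that the straight path maximizes ∆τ by discretizing Eq. 8 in flat 2D space-time (c = 1), where each short step contributes √(dt² − dx²). The sketch below (it assumes NumPy; the function name and the sinusoidal wiggles are arbitrary illustrative choices) compares a straight worldline with wiggly ones that share its endpoints.

```python
import numpy as np


def proper_time(t: np.ndarray, x: np.ndarray) -> float:
    """Approximate Delta tau (Eq. 8) along a path sampled at points (t[i], x[i]).

    Flat 2D space-time with c = 1, so each short segment contributes
    sqrt(dt^2 - dx^2). The path must move slower than light on every segment.
    """
    dt, dx = np.diff(t), np.diff(x)
    dtau2 = dt ** 2 - dx ** 2
    if np.any(dtau2 < 0):
        raise ValueError("the path moves faster than light somewhere")
    return float(np.sum(np.sqrt(dtau2)))


# All paths run from (t1, x1) = (0, 0) to (t2, x2) = (10, 0).
t = np.linspace(0.0, 10.0, 10_001)
print(f"straight path: proper time = {proper_time(t, np.zeros_like(t)):.4f}")

for amplitude in (0.1, 0.3, 0.5):
    # Three full oscillations, so every wiggly path also ends at x = 0 when t = 10.
    wiggly = amplitude * np.sin(6 * np.pi * t / 10.0)
    print(f"wiggle amplitude {amplitude}: proper time = {proper_time(t, wiggly):.4f}")
```

Every wiggly path comes out with less than 10 units of proper time, and the bigger the wiggles (the faster the observer moves on average), the less proper time elapses.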

Newton taught us that things move in straight lines if not acted on

by external forces. There is another way to understand this fact: things

move on paths that maximize their proper time when not acted on by

an external force.

This remains true in general relativity. Things like to move on paths that maximize

$$ \Delta\tau = \int d\tau. $$

Such paths are called “geodesics.” It takes an external force to make

things deviate from geodesics. Ignoring air resistance, a sky diver falling

to the earth is moving along a geodesic. However you, sitting in your

chair, are not moving along a geodesic because your chair is pushing up

on your bottom, providing an external force.

2.3 Einstein’s field equations

Space-time tells matter how to move; matter tells space-time

how to curve.

— John Wheeler

Einstein’s field equation tells you what the metric of space-time is in the presence of matter. This is the equation that has made Einstein truly immortal in the world of physics. It took him almost 10 years to come up with it, and he almost died in the process.

$$ R_{\mu\nu} - \frac{1}{2} g_{\mu\nu} R = \frac{8\pi G}{c^4} T_{\mu\nu} \tag{9} $$

Here, G is Newton’s gravitational constant and c is the speed of light. $g_{\mu\nu}$ is the metric. $R_{\mu\nu}$ is something called the “Ricci curvature tensor.” R is called the “scalar curvature.” Both $R_{\mu\nu}$ and R depend on $g_{\mu\nu}$ and its derivatives in a very complicated way. $T_{\mu\nu}$ is something called the “stress energy tensor.”

I will not explain all of the details, but hope to give you a heuristic picture. First off, notice the free indices µ and ν. Einstein’s equation is actually 16 equations, one for each choice of µ and ν from 0 to 3. However, because it is symmetric under the interchange of µ and ν, it is only 10 independent equations (the 4 diagonal components plus the 6 independent off-diagonal ones). They are extremely non-linear partial differential equations.


The stress energy tensor $T_{\mu\nu}$ can be thought of as a shorthand for the energy density in space. Wherever there is stuff, there is a non-zero $T_{\mu\nu}$. The exact form of $T_{\mu\nu}$ depends on what the “stuff” actually is.

More specifically, the different components of $T_{\mu\nu}$ correspond to different physical quantities.

Figure 13: Components of $T_{\mu\nu}$, taken from Wikipedia.

Roughly, Einstein’s equation can be understood as

something depending on curvature ≈ G × stuff density. (10)

This is what Wheeler meant by “matter tells space-time how to curve.”

Take the sun, for example. The sun is very massive, and therefore space-time is very curved in the sun. Because the sun distorts space-time, its radius is actually a few kilometers bigger than you would naively expect from flat space. At the location of the sun there is an appreciable $T_{\mu\nu}$, and likewise a lot of curvature.

Once you get away from the physical location of the sun into the

vacuum of space, $T_{\mu\nu} = 0$ and the curvature gradually dies off. This

curvature is what causes the Earth to orbit the sun. Locally, the Earth

is travelling in a straight line in space-time. But because space-time is

curved, the Earth’s path appears to be curved as well. This is what

Wheeler meant by “space-time tells matter how to move.”


Figure 14: $T_{\mu\nu}$ is large where there is stuff, and 0 in the vacuum of space.

Notice, however, that the Earth itself also has some matter density,

so it curves space-time as well. The thing is that it curves space-time

a lot less than the sun does. If we want to solve for the motion of

the Earth, we pretend it doesn’t have any mass and just moves in the

fixed “background” metric created by the sun. However, this is only an

approximation.

2.4 The Schwarzschild metric

What if $T_{00}$ is infinite at one point (a delta function) and 0 everywhere else? What will the metric be then? We have to solve the Einstein field equations to figure this out. (This is just a messy PDE problem, but it’s not so messy. For reference, it took me about 5 hours to do it while following along with a book.) Thankfully, the answer is very pretty. Setting c = 1,

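$$ d\tau^2 = \left(1 - \frac{2GM}{r}\right) dt^2 - \frac{dr^2}{1 - \frac{2GM}{r}} - r^2\left(d\theta^2 + \sin^2\theta \, d\phi^2\right). \tag{11} $$

$$ g_{\mu\nu} = \begin{pmatrix} 1 - \frac{2GM}{r} & 0 & 0 & 0 \\ 0 & -\frac{1}{1 - \frac{2GM}{r}} & 0 & 0 \\ 0 & 0 & -r^2 & 0 \\ 0 & 0 & 0 & -r^2 \sin^2\theta \end{pmatrix} \tag{12} $$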


Here we are using the spherical coordinates

(t, r, θ, φ)

where r is the radial coordinate and θ and φ are the “polar” and “azimuthal” angles on the surface of a sphere, respectively.

This is the first metric that was found using Einstein’s equations. It

was derived by a German man named Karl Schwarzschild. He wanted to

figure out what the metric was for a non-rotating spherically symmetric

gravitating body of mass M, like the sun. Outside of the radius of the

sun, the Schwarzschild metric does give the correct form for the metric

there. Inside the sun, the metric needs to be modified and becomes more complicated.

Interestingly, the metric “blows up” at the origin r = 0. Karl Schwarzschild just assumed that this wasn’t physical. Because a real planet or star would need the metric to be modified inside of its volume, this singularity would not exist in those cases. He assumed that the singularity would not be able to form in real life under any circumstances. Einstein himself was disturbed by such singularities, and made a number of flawed arguments for why they can’t exist. We know now that he wasn’t right, and that these singularities really do form in real life inside of what we call “black holes.”

In one of the amazing coincidences of history, “Schwarz” means “black”

in German while “schild” means “shield.” It appears that Karl Schwarzschild

was always destined to discover black holes, even if he himself didn’t

know that.

2.5 Black Holes

Let’s see if we can get an intuitive feel for black holes just by looking

at the Schwarzschild metric. First, note that there is an interesting

length

$$ r_s = 2GM. \tag{13} $$

This is the “Schwarzschild radius.” As I’m sure you have heard, anything that enters the Schwarzschild radius, A.K.A. the “event horizon,” can never escape. Why is that?
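Before answering, it helps to know how small this radius is. Eq. 13 is written with c = 1; restoring c gives r_s = 2GM/c², which we can evaluate for familiar objects. A small Python sketch with rounded SI constants (the function name is hypothetical):

```python
# Rounded SI constants.
G = 6.674e-11       # gravitational constant, m^3 kg^-1 s^-2
C = 2.998e8         # speed of light, m/s
M_SUN = 1.989e30    # mass of the sun, kg
M_EARTH = 5.972e24  # mass of the Earth, kg


def schwarzschild_radius(mass_kg: float) -> float:
    """r_s = 2GM/c^2 in metres: Eq. 13 with the factor of c restored."""
    return 2.0 * G * mass_kg / C ** 2


for name, mass in [("Sun", M_SUN), ("Earth", M_EARTH)]:
    print(f"{name}: r_s = {schwarzschild_radius(mass):.3g} m")
```

This gives about 3 km for the sun and about 9 mm for the Earth: each would have to be squeezed inside that radius to become a black hole.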


Note that at $r = r_s$, the dt component of the metric becomes 0 and

the dr component becomes infinite. This particular singularity isn’t

“real.” It’s a “coordinate singularity.” There are other coordinates we

could use, like the Kruskal–Szekeres coordinates that do not have this

unattractive feature. We will ignore this.

The more important thing to note is that the dt and dr components

flip signs as r dips below 2GM. This is very significant. Remember that

the flat space metric is

$$ d\tau^2 = dt^2 - dx^2 - dy^2 - dz^2. \tag{14} $$

The only thing that distinguishes time and space is a sign in the metric!

This sign flips once you cross the event horizon.

Here is why this is important. Say that a massive particle moves a

tiny bit to a nearby space-time point which is separated from the original

point by dτ. If the particle is moving slower than c, then $d\tau^2 > 0$.

However, inside of a black hole, as per Eq. 11, we can see that when $d\tau^2 > 0$, the particle must either be travelling into the center of the black hole or away from it. This is just because Eq. 11 is of the form

$$ d\tau^2 = \begin{cases} (+)\,dt^2 + (-)\,dr^2 + (-)\,d\theta^2 + (-)\,d\phi^2 & \text{if } r > 2GM \\ (-)\,dt^2 + (+)\,dr^2 + (-)\,d\theta^2 + (-)\,d\phi^2 & \text{if } r < 2GM \end{cases} $$

where (+) denotes a positive quantity and (−) denotes a negative quantity. In order that $d\tau^2 > 0$, we must have $dt^2 > 0$ outside of the event horizon but $dr^2 > 0$ inside the horizon, so dr cannot be 0.

Furthermore, if the particle started outside of the event horizon and then went in, travelling with dr < 0 along its path, then by continuity it has no choice but to keep travelling inside with dr < 0 until it hits the singularity.
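The sign flip can be checked directly from Eq. 11. This Python sketch (units with G = M = 1, so the horizon sits at r = 2; the function names are hypothetical) prints the signs of the dt² and dr² coefficients on both sides of r = 2GM.

```python
def _sign(value: float) -> str:
    """Return '+' for positive numbers and '-' otherwise."""
    return "+" if value > 0 else "-"


def schwarzschild_signs(r: float, gm: float = 1.0) -> tuple[str, str]:
    """Signs of the dt^2 and dr^2 coefficients in Eq. 11 (c = 1, r != 2GM)."""
    f = 1.0 - 2.0 * gm / r
    g_tt = f         # coefficient of dt^2
    g_rr = -1.0 / f  # coefficient of dr^2
    return _sign(g_tt), _sign(g_rr)


for r in (10.0, 3.0, 2.5, 1.5, 0.5):  # with GM = 1 the horizon is at r = 2
    tt, rr = schwarzschild_signs(r)
    side = "outside" if r > 2.0 else "inside"
    print(f"r = {r:4}: dt^2 term {tt}, dr^2 term {rr}  ({side} the horizon)")
```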

The reason that a particle cannot “turn around” and leave the black

hole is the exact same reason why you cannot “turn around” and go back

in time. If you think about it, there is a similar “horizon” between you

and your childhood. You can never go back. If you wanted to go back

in time, at some point you would have to travel faster than the speed of light (faster than 45°).

The r coordinate becomes “time-like” behind the event horizon.


Figure 15: Going back in time requires going faster than c, which is

impossible.

Outside of a black hole, we are forced to continue aging and die, t

ever increasing. Inside of a black hole, we would be forced to hit the

singularity and die, r ever decreasing. Death is always gently guiding

us into the future.

Figure 16: Once you have passed the event horizon of a black hole, r

and t “flip,” so now going into the future means going further into the

black hole until you hit the singularity.

2.6 Penrose Diagram for a Black Hole

If we get rid of the angular θ and φ coordinates, our Schwarzschild

space-time only has two coordinates (t, r). Once again, we can cook up

new coordinates that allow us to draw a Penrose diagram. Here is the

result.


Figure 17: Penrose diagram of maximally extended space-time with

Schwarzschild metric.

There is a lot to unpack here. Let’s start with the right-hand diamond. This is space-time outside of the black hole, where everyone is safe. The upper triangle is the interior of the black hole. Because the boundary is a 45° line, once you enter you cannot leave. This is the event horizon. The jagged line up top is the singularity that you are destined to hit once you enter the black hole. From the perspective of people outside the black hole, it takes an infinite amount of time for something to enter the black hole. It only enters at t = +∞.

Figure 18: Penrose diagram of black hole with some lines of constant

r and t labelled.


Figure 19: Two worldlines in this space-time, one which enters the

black hole and one which does not.

I’m sure you noticed that there are two other parts to the diagram.

The bottom triangle is the interior of the “white hole” and the left hand

diamond is another universe! This other universe is invisible to the

Schwarzschild coordinates, and only appears once the coordinates are

“maximally extended.”

First let’s look at the white hole. There’s actually nothing too crazy

about it. If something inside the black hole is moving away from the

singularity (with dr > 0) it has no choice but to keep doing so until it

leaves the event horizon. So the stuff that starts in the bottom triangle

is the stuff that comes out of the black hole. (In this context, however,

we call it the white hole). It enters our universe at t = −∞. It is

impossible for someone on the outside to enter the white hole. If they

try, they will only enter the black hole instead. This is because they can’t go faster than 45°!

Figure 20: Stuff can come out of the white hole and enter our universe

at t = −∞.


Okay, now what the hell is up with this other universe? It’s exactly the same as our universe, but different. Note that two people in the different universes can both enter the black hole and meet inside. However, they are both doomed to hit the singularity soon after. The two universes have no way to communicate outside of the black hole.

Figure 21: People from parallel universes can meet inside the black

hole.

But wait! Hold the phone! Black holes exist in real life, right? Is

there a mirror universe on the other side of every black hole????

No. The Schwarzschild metric describes an “eternal black hole” that

has been there since the beginning of time and will be there until the

end of time. Real black holes are not like this. They form when stars

collapse. It is more complicated to figure out what the metric is if you

want to take stellar collapse into account, but it can be done. I will not

write the metric, but I will draw the Penrose diagram.


Figure 22: A Penrose diagram for a black hole that forms via stellar

collapse.

Because the black hole forms at some finite time, there is no white

hole in our Penrose diagram. Likewise, there is no mirror universe.

It’s interesting to turn the Penrose diagram upside down, which gives another valid solution to Einstein’s equations. This depicts a universe in which a white hole has existed since the beginning of the universe. It keeps spewing out material, getting smaller and smaller, until it disappears at some finite time. No one can enter the white hole. If they try, they will only see it spew material faster and faster as they get closer. The white hole will dissolve right before their eyes. That is why they can’t enter it.

Figure 23: The Penrose diagram for a white hole that exists for some

finite time.


2.7 Black Hole Evaporation

I have not mentioned anything about quantum field theory yet, but I will give you a spoiler: black holes evaporate. This was discovered by Stephen Hawking in 1975. They radiate energy in the form of very low energy particles until they do not exist any more. This is a unique feature of what happens to black holes when you take quantum field theory into account, and is very surprising. Having said that, this process is extremely slow. A black hole with the mass of our sun would take about $10^{67}$ years to evaporate. Let’s take a look at the Penrose diagram for a black hole which forms via stellar collapse and then evaporates.

Figure 24: The Penrose diagram for a black hole which forms via stellar

collapse and then evaporates.
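To get a feel for the $10^{67}$ figure, here is a rough Python estimate. It uses the commonly quoted lifetime formula t ≈ 5120πG²M³/(ħc⁴), which treats the hole as a perfect blackbody radiating photons only and ignores anything falling in; that formula is brought in here as an assumption and is not derived in these notes. The constants are rounded SI values and the function name is hypothetical.

```python
import math

# Rounded SI constants.
G = 6.674e-11              # gravitational constant, m^3 kg^-1 s^-2
C = 2.998e8                # speed of light, m/s
HBAR = 1.0546e-34          # reduced Planck constant, J s
M_SUN = 1.989e30           # mass of the sun, kg
SECONDS_PER_YEAR = 3.156e7


def evaporation_time_years(mass_kg: float) -> float:
    """Order-of-magnitude lifetime t ~ 5120 * pi * G^2 * M^3 / (hbar * c^4), in years."""
    seconds = 5120.0 * math.pi * G ** 2 * mass_kg ** 3 / (HBAR * C ** 4)
    return seconds / SECONDS_PER_YEAR


print(f"solar-mass black hole: {evaporation_time_years(M_SUN):.1e} years")
```

For one solar mass this comes out to roughly 2 × 10^67 years. Note the M³ scaling: doubling the mass makes the lifetime 8 times longer.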


3 Quantum Field Theory

While reading this section, forget I told you anything about general

relativity. This section only applies to flat Minkowski space and has

nothing to do with black holes.

3.1 Quantum Mechanics

Quantum mechanics is very simple. You only need two things: a Hilbert space and a Hamiltonian. Once you specify those two things, you are done!

A Hilbert space H is just a complex vector space. States are elements

of the Hilbert space.

$$ |\psi\rangle \in \mathcal{H}. \tag{15} $$

Our Hilbert space also has a positive definite Hermitian inner product.

$$ \langle\psi|\psi\rangle > 0 \quad \text{if } |\psi\rangle \neq 0. \tag{16} $$

A Hamiltonian $\hat{H}$ is just a linear map

$$ \hat{H} : \mathcal{H} \to \mathcal{H} \tag{17} $$

that is self adjoint.

$$ \hat{H}^\dagger = \hat{H} \tag{18} $$

States evolve in time according to the Schrödinger equation

$$ \frac{d}{dt}|\psi\rangle = -\frac{i}{\hbar}\hat{H}|\psi\rangle. \tag{19} $$

Therefore states evolve in time according to

$$ U(t)\,|\psi\rangle \equiv \exp\left(-\frac{i}{\hbar} t \hat{H}\right)|\psi\rangle. \tag{20} $$

Because $\hat{H}$ is self adjoint, U(t) is unitary:

$$ U(t)^\dagger = U(t)^{-1}. \tag{21} $$

(Sometimes the Hamiltonian itself depends on time, i.e. $\hat{H} = \hat{H}(t)$. In these cases the situation isn’t so simple.)
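Eqs. 18 to 21 can be checked numerically in a finite-dimensional toy Hilbert space. This sketch assumes NumPy, a hypothetical random 4 × 4 Hermitian matrix standing in for $\hat{H}$, and units with ħ = 1; it builds U(t) from the eigendecomposition of H rather than from a general matrix exponential.

```python
import numpy as np

rng = np.random.default_rng(seed=0)
hbar = 1.0  # units with hbar = 1

# Hypothetical 4-dimensional Hilbert space with a random Hermitian Hamiltonian.
A = rng.normal(size=(4, 4)) + 1j * rng.normal(size=(4, 4))
H = (A + A.conj().T) / 2  # H^dagger = H, as in Eq. 18
E, V = np.linalg.eigh(H)  # real energies E and orthonormal eigenvectors V


def U(t: float) -> np.ndarray:
    """exp(-i t H / hbar) from Eq. 20, computed as V diag(exp(-i E t / hbar)) V^dagger."""
    return V @ np.diag(np.exp(-1j * E * t / hbar)) @ V.conj().T


psi0 = rng.normal(size=4) + 1j * rng.normal(size=4)
psi0 /= np.linalg.norm(psi0)

for t in (0.0, 0.7, 5.0):
    Ut = U(t)
    unitary = np.allclose(Ut.conj().T @ Ut, np.eye(4))  # Eq. 21
    norm2 = np.vdot(Ut @ psi0, Ut @ psi0).real           # <psi(t)|psi(t)>
    print(f"t = {t}: unitary? {unitary}, <psi|psi> = {norm2:.12f}")

# Check the Schrodinger equation (Eq. 19) with a central finite difference.
t, dt = 0.7, 1e-5
lhs = (U(t + dt) @ psi0 - U(t - dt) @ psi0) / (2 * dt)
rhs = -1j / hbar * H @ (U(t) @ psi0)
print("Eq. 19 holds numerically:", np.allclose(lhs, rhs, atol=1e-6))
```

Because U(t) is unitary, the norm of the state stays exactly 1 for all t, which is the finite-dimensional version of probability being conserved.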

I really want to drive this point into your head. Once you have a

Hilbert space $\mathcal{H}$ and a Hamiltonian $\hat{H}$, you are DONE!


3.2 Quantum Field Theory vs Quantum Mechanics

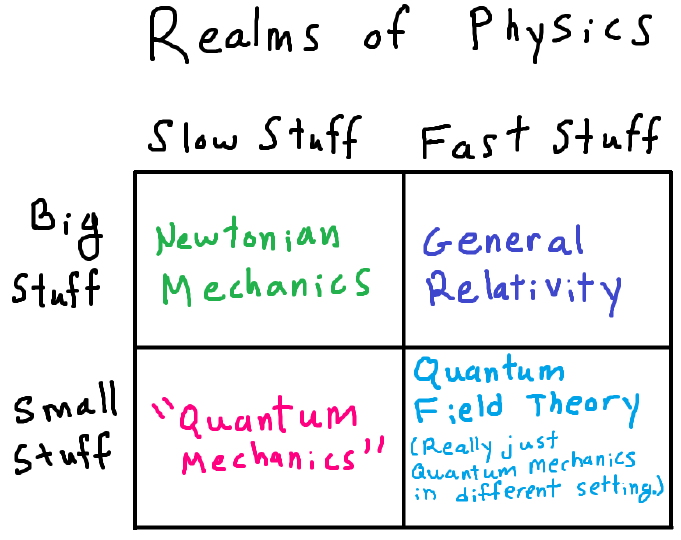

Different areas of physics need different theories to describe them.

People usually use the term “quantum mechanics” to describe things

that are small but moving very slow. This is the domain of chemistry.

However, once things travel very fast, there is enough energy to make

new particles and destroy old ones. This is the domain of quantum field

theory.

However, mathematically speaking, quantum field theory is just a subset of quantum mechanics. States in quantum field theory live in a Hilbert space and evolve according to a Hamiltonian just like in quantum mechanics.

I am going to be extremely ambitious here and literally just tell you

what this Hilbert space and Hamiltonian actually are for a very simple

quantum field theory. However, I will not describe to you the Hilbert

space of the actual quantum fields we see in real life like the photon

field, the electron field, etc. Actual particles have a confusing property

called “spin” which I don’t want to get into. I will instead tell you

about the quantum field theory of a fictitious “spin 0” particle that could

theoretically exist in real life but doesn’t appear to. Furthermore, this

particle will not “interact” with any other particle, making its analysis

particularly simple.


3.3 The Hilbert Space of QFT: Wavefunctionals

A classical field is a function φ(x) from space into $\mathbb{R}$.

$$ \phi : \mathbb{R}^3 \to \mathbb{R}. \tag{22} $$

We denote the space of smooth functions on $\mathbb{R}^3$ by $C^\infty(\mathbb{R}^3)$.

$$ \phi \in C^\infty(\mathbb{R}^3). \tag{23} $$

Each particular φ is called a “classical field configuration.” Each value

φ(x) for some particular x is called a “field variable.”

Figure 25: A classical field assigns a real number to each point in

space. I have suppressed the three spatial dimensions into just one, x,

for simplicity.

Now I’m going to tell you what a quantum field state is. Are you

ready? A quantum field state is a functional from classical fields to

complex numbers.

$$ \Psi : C^\infty(\mathbb{R}^3) \to \mathbb{C} \tag{24} $$

$$ \mathcal{H}_{\text{QFT}} = \text{all such wave functionals} \tag{25} $$

These are called “wave functionals.” Let’s say you have two wave functionals Ψ and Φ. The inner product is this infinite dimensional integral, which integrates over all possible classical field configurations:

$$ \langle\Psi|\Phi\rangle = \int \left(\prod_{x \in \mathbb{R}^3} d\phi(x)\right) \Psi[\phi]^* \, \Phi[\phi]. \tag{26} $$

Obviously, the product over $x \in \mathbb{R}^3$ isn’t mathematically well defined. However, there’s a whole branch of physics called “lattice field theory” where people discretize space into lattices in order to compute things on a supercomputer. Furthermore, physicists have many reasons to believe that if we had a full theory of quantum gravity, we would realize that quantum field theory as we know it does break down at very tiny Planck-length sized distances. Most likely it would not be anything as crude as a literal lattice, but something must be going on at really small lengths. Anyway, because we don’t have a theory of quantum gravity, this is the best we can do for now.
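To make Eq. 26 concrete, here is a toy lattice with just 2 sites, where the “infinite dimensional integral” becomes an ordinary 2D integral that can be approximated by a sum over a grid. The lattice, the two wave functionals, and the grid are hypothetical choices for illustration (it assumes NumPy); real lattice computations use many more sites and much smarter methods, since a brute-force grid grows exponentially with the number of sites.

```python
import numpy as np

# Toy "lattice": space is replaced by just 2 sites, so a classical field
# configuration is a pair (phi_1, phi_2) and a wave functional is an ordinary
# complex function of two real variables.
values = np.linspace(-8.0, 8.0, 801)  # grid of values each field variable can take
dphi = values[1] - values[0]
PHI1, PHI2 = np.meshgrid(values, values, indexing="ij")


def Psi(phi1, phi2):
    """Hypothetical Gaussian wave functional exp(-(phi_1^2 + phi_2^2) / 2)."""
    return np.exp(-(phi1 ** 2 + phi2 ** 2) / 2)


def Phi(phi1, phi2):
    """Hypothetical second wave functional: the same Gaussian times a phase exp(i phi_1)."""
    return Psi(phi1, phi2) * np.exp(1j * phi1)


def inner(F, G):
    """Eq. 26 on the lattice: sum of conj(F) * G over all configurations, times dphi_1 dphi_2."""
    return np.sum(np.conj(F(PHI1, PHI2)) * G(PHI1, PHI2)) * dphi ** 2


print(inner(Psi, Psi))  # exact answer: pi
print(inner(Psi, Phi))  # exact answer: pi * exp(-1/4)
```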

The physical interpretation is that if $|\Psi[\phi]|^2$ is very big for a particular φ, then the quantum field is very likely to “be” in the classical field configuration φ.

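Note that we have a basis of wave functionals given by

$$ \Psi_{\phi_0}[\phi] \propto \begin{cases} 1 & \text{if } \phi = \phi_0 \\ 0 & \text{if } \phi \neq \phi_0 \end{cases} \tag{27} $$

for all $\phi_0 \in C^\infty(\mathbb{R}^3)$. We can write them as

$$ |\Psi_{\phi_0}\rangle. $$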

(You should think of these as the i in $|i\rangle$. Each classical field $\phi_0$ labels a “coordinate” of the QFT Hilbert space.) All other wave functionals can be written as a linear combination of these wave functionals with complex coefficients. However, this basis of the Hilbert space is physically useless. You would never ever see a quantum field state like these in real life. (The reason is that they have infinite energy.) I will tell you about a more useful basis for quantum field states a bit later.

3.4 Two Observables

An observable $\hat{O}$ is a linear map

$$ \hat{O} : \mathcal{H} \to \mathcal{H} \tag{28} $$

that is self adjoint:

$$ \hat{O}^\dagger = \hat{O}. \tag{29} $$

Because it is self adjoint, all of its eigenvalues must be real numbers.

An eigenstate $|\psi\rangle$ of $\hat{O}$ that satisfies

$$ \hat{O}|\psi\rangle = \lambda|\psi\rangle \tag{30} $$

for some $\lambda \in \mathbb{R}$ has the interpretation of having definite value λ under the measurement corresponding to $\hat{O}$.

27

There are two important sets of observables I have to tell you about for these wave functionals. They are called

$$\hat\phi(x) \quad\text{and}\quad \hat\pi(x). \tag{31}$$

There are an infinite number of them, one for each $x$. You should think of the measurements $\hat\phi(x)$ and $\hat\pi(x)$ as measurements occurring at the point $x$ in space. They are linear operators

$$\hat\phi(x) : \mathcal{H}_{\rm QFT} \to \mathcal{H}_{\rm QFT} \quad\text{and}\quad \hat\pi(x) : \mathcal{H}_{\rm QFT} \to \mathcal{H}_{\rm QFT}$$

which are defined as follows.

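$$(\hat\phi(x)\Psi)[\phi] \equiv \phi(x)\,\Psi[\phi] \tag{32}$$

$$(\hat\pi(x)\Psi)[\phi] \equiv \frac{\hbar}{i}\,\frac{\delta}{\delta\phi(x)}\Psi[\phi] \tag{33}$$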

(Use the hats to help you! $\hat\phi(x)$ is an operator acting on wave functionals, while $\phi$ is the classical field configuration at which we are evaluating our wave functional. $\phi(x)$ is just the value of that input $\phi$ at $x$.)

First let’s talk about $\hat\phi(x)$. It is the observable that “measures the value of the field at $x$.” For example, the expected field value at $x$ would be

$$\langle\Psi|\hat\phi(x)|\Psi\rangle = \int \prod_{x'\in\mathbb{R}^3} d\phi(x')\;|\Psi[\phi]|^2\,\phi(x).$$

Note that our previously defined $\Psi_{\phi_0}$ are eigenstates of this operator. For any $\phi_0 \in C^\infty(\mathbb{R}^3)$, we have

$$\hat\phi(x)|\Psi_{\phi_0}\rangle = \phi_0(x)|\Psi_{\phi_0}\rangle. \tag{34}$$

The physical interpretation of $\hat\pi(x)$ is a bit obscure. First off, if you don’t know,

$$\frac{\delta}{\delta\phi(x)} \tag{35}$$

is called the “functional derivative.” It is defined by

$$\frac{\delta\phi(x)}{\delta\phi(y)} \equiv \delta^3(x-y). \tag{36}$$

($\delta^3(x-y)$ is the three-dimensional Dirac delta function. It satisfies $\int d^3x\, f(x)\,\delta^3(x-y) = f(y)$ for any $f:\mathbb{R}^3\to\mathbb{C}$, where $d^3x = dx\,dy\,dz$ is the three-dimensional volume measure.) This is just the infinite-dimensional version of the partial derivative in multivariable calculus:

$$\frac{\partial x_i}{\partial x_j} = \delta_{ij}. \tag{37}$$

Basically, ˆπ(x) measures the rate of change of the wave functional with

respect to one particular field variable φ(x). (The i is there to make it

self-adjoint.) I don’t want to get bogged down in its physical interpre-

tation.
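Here is a minimal numerical check of the remark that the $i$ makes $\hat\pi(x)$ self-adjoint. As a simplifying assumption, it keeps a single field variable $\phi(x)$ on a grid, approximates $d/d\phi(x)$ by a central-difference matrix with zero boundary values, and sets $\hbar = 1$. The grid size and spacing are arbitrary.

```python
import numpy as np

HBAR = 1.0  # assumption: units with hbar = 1

# Hypothetical discretization of ONE field variable phi(x) on a grid.
n = 200
h = 0.05
# Central-difference approximation of d/dphi with zero boundary values.
D = (np.eye(n, k=1) - np.eye(n, k=-1)) / (2 * h)
# pi_hat = (hbar / i) d/dphi, as in Eq. (33)
P = (HBAR / 1j) * D

print(np.allclose(D, D.conj().T))  # False: the bare derivative is anti-self-adjoint
print(np.allclose(P, P.conj().T))  # True: the factor 1/i makes it self-adjoint
eigs = np.linalg.eigvals(P)
print(np.allclose(eigs.imag, 0, atol=1e-8))  # real eigenvalues, as for any observable
```

The derivative matrix alone satisfies $D^\dagger = -D$; multiplying by $1/i$ flips that sign, which is exactly why the $i$ appears in Eq. 33.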

3.5 The Hamiltonian

Okay, I’ve now told you about the Hilbert space, the inner prod-

uct, and a few select observables. Now I’m going to tell you what the

Hamiltonian is and then I’ll be done!

$$\hat H = \frac{1}{2}\int d^3x\,\left(\hat\pi^2 + (\nabla\hat\phi)^2 + m^2\hat\phi^2\right) \tag{38}$$

Done! (Here m is just a real number.)

(Now you might be wondering where I got this Hamiltonian from.

The beautiful thing is, I do not have to tell you! I am just telling

you the laws. Nobody truly knows where the laws of physics come

from. The best we can hope for is to know them, and then derive

their consequences. Now obviously I am being a bit cheeky, and there

are many desirable things about this Hamiltonian. But you shouldn’t

worry about that at this stage.)

I used some notation above that I have not defined. I am integrating over all of space, so really I should have written $\hat\pi(x)$ and $\hat\phi(x)$, but I suppressed that dependence for aesthetics. Furthermore, the gradient term should be written out explicitly:

$$(\nabla\hat\phi)^2 = (\partial_x\hat\phi)^2 + (\partial_y\hat\phi)^2 + (\partial_z\hat\phi)^2$$

where

$$\partial_x\hat\phi(x,y,z) = \lim_{\Delta x\to 0}\frac{\hat\phi(x+\Delta x,y,z) - \hat\phi(x,y,z)}{\Delta x}.$$

Let’s get an intuitive understanding for this Hamiltonian by looking at

it term by term.

The $\hat\pi(x)^2$ term means that a wave functional has a lot of energy if it changes quickly when a particular field variable is varied.

For the other two terms, let’s imagine that our state is well approximated by one of those basis states $\Psi_{\phi_0}$ we talked about previously. This means it is “close” to being a “classical” field:

$$|\Psi\rangle \approx |\Psi_{\phi_0}\rangle. \tag{39}$$

Then the $(\nabla\hat\phi)^2$ term means that a wave functional has a lot of energy if $\phi_0$ has a big gradient. Similarly, the $m^2\hat\phi^2$ term means the wave functional has a lot of energy if $\phi_0$ is non-zero in a lot of places.
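The gradient and mass terms of Eq. 38 depend only on the field configuration, so for a state close to $|\Psi_{\phi_0}\rangle$ we can get a feel for them by evaluating their classical counterparts on $\phi_0$ (the $\hat\pi^2$ term has no such counterpart and is left out). The sketch below does this on a hypothetical one-dimensional periodic lattice, a simplification of $\mathbb{R}^3$; the lattice size, spacing, mass, and test fields are arbitrary choices.

```python
import numpy as np

# Hypothetical 1D periodic lattice (the text works in 3D; 1D keeps it short).
N = 100
a = 0.1      # lattice spacing
m = 1.0      # the mass parameter in Eq. (38)
x = a * np.arange(N)
length = N * a


def field_energy(phi0):
    """Classical gradient and mass terms of Eq. (38) evaluated on phi0."""
    grad = (np.roll(phi0, -1) - phi0) / a          # forward difference, periodic
    gradient_term = 0.5 * a * np.sum(grad ** 2)    # (1/2) integral of (grad phi)^2
    mass_term = 0.5 * a * np.sum(m ** 2 * phi0 ** 2)
    return float(gradient_term), float(mass_term)


smooth = np.sin(2 * np.pi * x / length)            # one slow oscillation
jittery = np.sin(2 * np.pi * 20 * x / length)      # same amplitude, 20 oscillations
zero = np.zeros(N)

print(field_energy(smooth))
print(field_energy(jittery))   # same mass term, far larger gradient term
print(field_energy(zero))      # (0.0, 0.0)
```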

3.6 The Ground State

I will now tell you what the lowest energy eigenstate of this Hamiltonian is. It is

$$\Psi_0[\phi] \propto \exp\left(-\frac{1}{2\hbar}\int d^3k\,\sqrt{k^2+m^2}\,|\phi_k|^2\right) \tag{40}$$

where

$$\phi_k \equiv \int \frac{d^3x}{(2\pi)^{3/2}}\,\phi(x)\,e^{-ik\cdot x} \tag{41}$$

are the Fourier components (or “modes”) of the classical field configuration $\phi(x)$. Because $\phi$ is real, $\phi_k^* = \phi_{-k}$. Note $d^3k$ is the three-dimensional volume measure over $k$-space, and $k^2 = |k|^2$. The bigger $|k|$ is, the higher the “frequency” of the Fourier mode is.
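The reality condition $\phi_k^* = \phi_{-k}$ is easy to check numerically with a discrete Fourier transform. This sketch assumes a hypothetical one-dimensional periodic lattice, so the $(2\pi)^{3/2}$ of Eq. 41 becomes $(2\pi)^{1/2}$ and the integral becomes a sum times the spacing $a$. In NumPy’s FFT ordering, entry $(-j) \bmod N$ carries the mode $-k$ when entry $j$ carries $k$.

```python
import numpy as np

# Hypothetical 1D periodic lattice standing in for R^3.
N, a = 64, 0.1
rng = np.random.default_rng(0)
phi = rng.normal(size=N)          # an arbitrary real classical field

# 1D analogue of Eq. (41): phi_k = sum_x a * phi(x) * e^{-ikx} / sqrt(2 pi)
phi_k = a * np.fft.fft(phi) / np.sqrt(2 * np.pi)
k = 2 * np.pi * np.fft.fftfreq(N, d=a)   # wavenumber carried by each entry

# Entry (-j) % N holds -k when entry j holds k (the Nyquist entry pairs with itself).
minus_k = (-np.arange(N)) % N
print(np.allclose(np.conj(phi_k), phi_k[minus_k]))  # True: phi_k^* = phi_{-k}
print(k[1], k[minus_k[1]])                          # a mode and its partner -k
```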

Let’s try and understand this wave functional qualitatively. It takes its largest value when $\phi(x) = 0$. The larger the Fourier components of the classical field, the smaller $\Psi_0$ is. Therefore the wave functional outputs very tiny numbers for classical fields that are far from 0. Furthermore, because of the $\sqrt{k^2+m^2}$ factor, the high-frequency Fourier components are penalized more heavily than the low-frequency Fourier components. Therefore, the wave functional $\Psi_0$ is very small for big and jittery classical fields, and very large for small and gradually varying classical fields.

Figure 26: Some sample classical field configurations and the relative size of $\Psi_0$ when evaluated at each one. The upper-left field maximizes $\Psi_0$ because it is 0. The upper-right field is pretty close to 0, so $\Psi_0$ is still pretty big. The lower-left field makes $\Psi_0$ small because it contains large field values. The lower-right field makes $\Psi_0$ small because its frequency $|k|$ is large, even though its Fourier coefficient is not that large.
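To reproduce the qualitative ranking described in Figure 26, here is a sketch that evaluates $\log\Psi_0[\phi]$ (up to an additive constant) for a few test fields. Assumptions: a one-dimensional periodic lattice instead of $\mathbb{R}^3$, units with $\hbar = 1$, and the $k$ integral replaced by a sum over the lattice’s discrete modes with spacing $2\pi/(Na)$. The field names, amplitudes, and the loose correspondence to the figure’s panels are made up for illustration.

```python
import numpy as np

HBAR = 1.0                      # assumption: units with hbar = 1
N, a, m = 256, 0.05, 1.0        # hypothetical lattice size, spacing, mass
x = a * np.arange(N)
length = N * a


def log_psi0(phi):
    """log Psi_0[phi] up to a constant: 1D lattice version of Eq. (40)."""
    phi_k = a * np.fft.fft(phi) / np.sqrt(2 * np.pi)
    k = 2 * np.pi * np.fft.fftfreq(N, d=a)
    dk = 2 * np.pi / length                 # spacing of the discrete modes
    omega = np.sqrt(k ** 2 + m ** 2)
    return -np.sum(dk * omega * np.abs(phi_k) ** 2) / (2 * HBAR)


def wave(amplitude, n_oscillations):
    return amplitude * np.sin(2 * np.pi * n_oscillations * x / length)


fields = {
    "zero": np.zeros(N),        # like the upper-left panel
    "small": wave(0.1, 1),      # like the upper-right panel: close to 0
    "large": wave(2.0, 1),      # like the lower-left panel: large values
    "slow": wave(0.3, 1),       # same amplitude as "jittery", low |k|
    "jittery": wave(0.3, 40),   # like the lower-right panel: high |k|
}
results = {name: log_psi0(phi) for name, phi in fields.items()}
for name, value in results.items():
    print(f"{name:8s} log Psi_0 = {value:9.4f}")
```

Comparing "slow" with "jittery" isolates the effect of the $\sqrt{k^2+m^2}$ factor: same amplitude, very different $\Psi_0$.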

First, let’s recall what we mean by ground state. Because $|\Psi_0\rangle$ is an energy eigenstate,

$$\hat H|\Psi_0\rangle = E_0|\Psi_0\rangle \tag{42}$$

for some energy $E_0$. What makes it the ground state is that any other energy eigenstate will necessarily have an eigenvalue that is bigger than $E_0$.

Intuitively speaking, why is $|\Psi_0\rangle$ the ground state? It’s because it negotiates all of the competing interests of the terms in the Hamiltonian to minimize its eigenvalue. Recall that there are three terms in the Hamiltonian from Eq. 38. Let’s go through all three terms and see how $\Psi_0$ tries to minimize each one.

1. The $\hat\pi^2$ term doesn’t want the functional to vary too quickly as the classical field input is changed. This term is kept in check because $\Psi_0$ varies like a Gaussian in terms of the Fourier components $\phi_k$, rather than changing abruptly.

2. The $(\nabla\hat\phi)^2$ term is minimized when likely classical field configurations have small gradients. This term is kept small by the $\sqrt{k^2+m^2}$ factor, which penalizes high-gradient jittery fields more harshly than small-gradient gradually varying fields.

3. The $m^2\hat\phi^2$ term wants likely classical field configurations to have field values $\phi(x)$ close to 0. This is minimized by making $\Psi_0$ peak around the classical field configuration $\phi(x) = 0$.

Now that we have some appreciation for the ground state, I want to rewrite it in a suggestive way:

$$\Psi_0[\phi] \propto \exp\left(-\frac{1}{2\hbar}\int d^3k\,\sqrt{k^2+m^2}\,|\phi_k|^2\right) \propto \prod_{k\in\mathbb{R}^3}\exp\left(-\frac{1}{2\hbar}\sqrt{k^2+m^2}\,|\phi_k|^2\right).$$

We can see that $|\Psi_0\rangle$ “factorizes” nicely when written in terms of the Fourier components $\phi_k$ of the classical field input.

3.7 Particles

You ask, “Alright, great, I can see what a quantum field is. But what

does this have to do with particles?”

Great question. These wave functionals seem to have nothing to do

with particles. However, the particle states are hiding in these wave

functionals, somehow. It turns out that we can make wave functionals

that describe a state with a certain number of particles possessing some

specified momenta $\hbar k$. Here is how you do it:

Let’s say that for each $k$, there are $n_k$ particles present with momenta $\hbar k$. Schematically, the wave functionals corresponding to these states are

$$\Psi[\phi] \propto \prod_{k\in\mathbb{R}^3} F_{n_k}(\phi_k,\phi_{-k})\,\exp\left(-\frac{1}{2\hbar}\sqrt{k^2+m^2}\,|\phi_k|^2\right) \tag{43}$$

for some set of functions $F_{n_k}$.

However, people never really work in terms of these functions $F_{n_k}$, whatever they are. More commonly, states are written in terms of “occupation number” notation. We would write the state in Eq. 43 as

$$|\Psi\rangle = |n_{k_1}, n_{k_2}, n_{k_3}, \dots\rangle. \tag{44}$$

These states are definite energy states because they are eigenstates of the Hamiltonian:

$$\hat H|n_{k_1}, n_{k_2}, n_{k_3}, \dots\rangle = \left(E_0 + \sum_{k\in\mathbb{R}^3} n_k\sqrt{\hbar^2k^2+m^2}\right)|n_{k_1}, n_{k_2}, n_{k_3}, \dots\rangle \tag{45}$$

(Remember that $E_0$ is the energy of the ground state $|\Psi_0\rangle$.) If you ever took a class in special relativity, you would have learned that the energy $E$ of a particle with momentum $\vec p$ and mass $m$ satisfies

$$E^2 = p^2c^2 + m^2c^4. \tag{46}$$

That is exactly where the square root in Eq. 45 comes from! (Remember we set $c = 1$.) Each term in the sum is the energy of $n_k$ particles with mass $m$ and momentum $\hbar k$. The ground state is just the state where all $n_k = 0$.
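The factorized form of $\Psi_0$ from Section 3.6 is a product of Gaussians $\exp(-\omega q^2/2\hbar)$, one per mode, with $\omega = \sqrt{k^2+m^2}$. A Gaussian of exactly this form is the ground state of a harmonic oscillator with Hamiltonian $\frac12(p^2+\omega^2q^2)$, whose levels are $\hbar\omega(n+\frac12)$: each extra quantum costs $\hbar\omega$, which is the one-particle energy in Eq. 45 when $\hbar = c = 1$. The sketch below checks this for a single mode under simplifying assumptions: $\hbar = 1$, the mode coordinate $q$ is treated as one real variable (in the text $\phi_k$ is complex and pairs with $\phi_{-k}$), and $p = (\hbar/i)\,d/dq$ is discretized with finite differences. The value $\omega = 2$ is arbitrary.

```python
import numpy as np

HBAR = 1.0     # assumption: units with hbar = 1
omega = 2.0    # plays the role of sqrt(k^2 + m^2) for one mode

# Grid for one real mode coordinate q
n, L = 801, 6.0
q = np.linspace(-L, L, n)
h = q[1] - q[0]

# H = p^2/2 + omega^2 q^2/2, with p^2 = -hbar^2 d^2/dq^2 as a finite difference
kinetic = (HBAR ** 2 / (2 * h ** 2)) * (
    2 * np.eye(n) - np.eye(n, k=1) - np.eye(n, k=-1)
)
potential = np.diag(0.5 * omega ** 2 * q ** 2)
energies, states = np.linalg.eigh(kinetic + potential)

print(energies[:3])   # approximately [1, 3, 5] = hbar * omega * (n + 1/2)

# Compare the numerical ground state with the Gaussian factor in Psi_0
gaussian = np.exp(-omega * q ** 2 / (2 * HBAR))
gaussian /= np.linalg.norm(gaussian)
overlap = abs(states[:, 0] @ gaussian)
print(overlap)        # very close to 1
```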

Not every state can be written in the form of Eq. 44. However,

every state can be written in terms of a linear combination of states

of that form. Therefore, we now have two different ways to understand

the Hilbert space of quantum field theory. On one hand, we can think

of them as wave functionals. On the other hand, we can think of them

in terms of particle occupation numbers. These are really two different

bases for the same Hilbert space.

There’s something I need to point out. These particle states I’ve

written are completely “delocalized” over all of space. These particles

do not exist at any particular location. They are infinite plane waves

spread out over the whole universe. This is because they are energy (and

momentum) eigenstates, meaning they have a well-defined energy. If we

wanted to “localize” these particles, we could make a linear combination

of particles of slightly different momenta in order to make a Gaussian

wave packet. This Gaussian wave packet would not have a perfectly well

defined energy or momentum, though. There would be some uncertainty

because it is a superposition of energy eigenstates.

So if we momentarily let $|k\rangle$ denote the state containing just one particle with momentum $k$, then a particle state that is a wave packet with central momentum $k_0$ and frequency width $\sigma$ could be written as

$$|k_0\rangle_{\rm Gaussian} \propto \int d^3k\,\exp\left(-\frac{(k-k_0)^2}{2\sigma^2}\right)|k\rangle.$$
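Here is a minimal sketch of that superposition in one dimension (an assumption; the text’s integral is over $d^3k$), with made-up values $k_0 = 3$ and $\sigma = 0.5$. It sums plane waves $e^{ikx}$ with the Gaussian weights over a grid of $k$ values and compares against the closed form $\sigma\sqrt{2\pi}\,e^{ik_0x}e^{-\sigma^2x^2/2}$ of the same Gaussian integral. The result is localized, with a position-space width of about $1/\sigma$. Since it treats the packet as an ordinary function of $x$, it is only a cartoon of the QFT state.

```python
import numpy as np

# 1D version of the wave packet; k0 and sigma are arbitrary choices.
k0, sigma = 3.0, 0.5
k = np.linspace(k0 - 10 * sigma, k0 + 10 * sigma, 2001)
dk = k[1] - k[0]
weights = np.exp(-(k - k0) ** 2 / (2 * sigma ** 2))

x = np.linspace(-20, 20, 401)
# Superpose plane waves e^{ikx}, each of which is completely delocalized.
packet = (weights[None, :] * np.exp(1j * np.outer(x, k))).sum(axis=1) * dk

# Analytic value of the same Gaussian integral, for comparison
exact = sigma * np.sqrt(2 * np.pi) * np.exp(1j * k0 * x - sigma ** 2 * x ** 2 / 2)
print(np.max(np.abs(packet - exact)))     # tiny
print(abs(packet[200]), abs(packet[-1]))  # large at x = 0, negligible at x = 20
```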

I have included a picture of a wavepacket in the image below. However,

don’t forget that our QFT “wavepacket” is really a complicated wave

functional, and does not have any interpretation as a classical field.


Figure 27: A localized wave packet is the sum of completely delocalized

definite-frequency waves. Note that you can’t localize a wave packet into

a volume that isn’t at least a few times as big as its wavelength.

There are four final things you might be wondering about particles. Firstly, where are the “anti-particles” you’ve heard so much about? The answer is that there are no anti-particles in the quantum field I’ve described here. This is because the classical field configurations are functions $\phi:\mathbb{R}^3\to\mathbb{R}$. If our classical fields were functions $\phi:\mathbb{R}^3\to\mathbb{C}$, then we would find that there are two types of particles, one of which we would call “anti-particles.” Secondly, I should say that the particles I’ve described are bosons. That means we can have as many particles as we want with some momentum $\hbar k$. In other words, our occupation numbers can be any non-negative integer. A fermionic field is different. Fermionic fields can only have occupation numbers of 0 or 1, so they are rather “digital” in that sense. Fermionic quantum field states therefore do not have the nice wave-functional interpretation that bosonic quantum fields have. Thirdly, the particle we’ve constructed here has no spin, i.e. it is a “spin 0” particle. The sorts of particles we’re most used to, like electrons and photons, are not of this type. They have spin $\tfrac{1}{2}$ and spin 1, respectively.

Fourthly, where are the Feynman diagrams you’ve probably heard so

much about? Feynman diagrams are useful for describing particle in-

teractions. For example, an electron can emit or absorb a photon, so we

say the electron field interacts with the photon field. I have only told

you here about non-interacting particles, which is perfectly sufficient for

our purposes. Feynman diagrams are often used to compute “scattering

amplitudes.” For example, say I send two electron wave packets into each other with some momenta and relative angles, wait a while, and then observe two electron wave packets leaving with new momenta at different relative angles. Physicists use Feynman diagrams as a tool to calculate the probability of such an event.

3.8 Entanglement properties of the ground state

We have now looked at our Hilbert space $\mathcal{H}_{\rm QFT}$ in two different bases: the wave-functional basis and the particle basis. Both have their strengths and weaknesses. However, I would like to bring up something interesting. Thinking in terms of the wave-functional basis, we can see that $\mathcal{H}_{\rm QFT}$ can be decomposed into a tensor product of Hilbert spaces, one for each position $x$ in space.

$$\mathcal{H}_{\rm QFT} = \bigotimes_{x\in\mathbb{R}^3}\mathcal{H}_x \tag{47}$$

(Once again, we might imagine that our tensor product is not truly taken over all of $\mathbb{R}^3$, but perhaps over a lattice of Planck-length spacing, for all we know.) Each local Hilbert space $\mathcal{H}_x$ consists of all normalizable functions from $\mathbb{R}\to\mathbb{C}$. Following mathematicians, we call the space of such functions $L^2(\mathbb{R})$:

$$\mathcal{H}_x = L^2(\mathbb{R})$$

Fixing $x$, each state in $\mathcal{H}_x$ simply assigns a complex number to each possible classical value of $\phi(x)$. Once we tensor together all $\mathcal{H}_x$, we recover our space of field wave functionals. The question I now ask you

is: what are the position-space entanglement properties of the ground

state?

Let’s back up a bit and remind ourselves what the ground state looks like. We wrote it in terms of the Fourier components:

$$\Psi_0[\phi] \propto \exp\left(-\frac{1}{2\hbar}\int d^3k\,\sqrt{k^2+m^2}\,|\phi_k|^2\right)$$

$$\phi_k \equiv \int\frac{d^3x}{(2\pi)^{3/2}}\,\phi(x)\,e^{-ik\cdot x}$$

We can plug the bottom expression into the top one to express $\Psi_0[\phi]$ in terms of the position-space classical field $\phi(x)$.

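$$\Psi_0[\phi] \propto \exp\left(-\frac{1}{2\hbar}\iiint \frac{d^3k\,d^3x\,d^3y}{(2\pi)^3}\,e^{-ik\cdot(x-y)}\sqrt{k^2+m^2}\,\phi(x)\phi(y)\right)$$

$$\propto \exp\left(-\frac{1}{2\hbar}\iint\frac{d^3x\,d^3y}{(2\pi)^3}\,f(|x-y|)\,\phi(x)\phi(y)\right)$$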

One could in principle perform the k integral to compute f(|x − y|),

although I won’t do that here. (There’s actually a bit of funny business

you have to do, introducing a “regulator” to make the integral converge.)

The important thing to note is that the values of the field variables $\phi(x)$ and $\phi(y)$ are entangled together by $f(|x-y|)$, and the wave functional $\Psi_0$ does not factorize nicely in position space the way it did in Fourier space. The bigger $f(|x-y|)$ is, the larger the entanglement between $\mathcal{H}_x$ and $\mathcal{H}_y$ is. We can see that in the ground state, the value of the field at one point is quite entangled with the field at other points. Indeed, there is a lot of short-range entanglement all throughout the universe. However, it turns out that $f(|x-y|)$ becomes very small at large distances. Therefore, nearby field variables are highly entangled, while distant field variables are not very entangled.
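To see this decay without doing the regulated $k$ integral, here is a sketch on a hypothetical periodic chain of $N$ sites in one dimension with unit spacing (the lattice spacing acts as the regulator, and 1D replaces $\mathbb{R}^3$ as a simplification). Writing the gradient and mass terms as $\frac12\phi^{T}K\phi$, the ground state of these coupled oscillators is $\Psi_0 \propto \exp(-\phi^{T}K^{1/2}\phi/2\hbar)$, so the matrix $F = K^{1/2}$ is the lattice stand-in for $f(|x-y|)$, and in that state $\langle\phi_i\phi_j\rangle = \frac{\hbar}{2}(K^{-1/2})_{ij}$. Both fall off with the separation $r$. The values of $N$ and $m$ are arbitrary.

```python
import numpy as np

HBAR = 1.0
N, m = 64, 0.5          # periodic chain of N sites, lattice spacing 1

# K encodes (grad phi)^2 + m^2 phi^2: H = sum_i p_i^2 / 2 + phi^T K phi / 2
K = ((2 + m ** 2) * np.eye(N)
     - np.roll(np.eye(N), 1, axis=1)
     - np.roll(np.eye(N), -1, axis=1))

w, V = np.linalg.eigh(K)                            # normal modes of the chain
F = V @ np.diag(np.sqrt(w)) @ V.T                   # Psi_0 ~ exp(-phi^T F phi / 2hbar)
G = (HBAR / 2) * V @ np.diag(1 / np.sqrt(w)) @ V.T  # <phi_i phi_j> in Psi_0

for r in (0, 1, 2, 5, 10):
    print(f"r = {r:2d}   F[0, r] = {F[0, r]: .2e}   <phi_0 phi_r> = {G[0, r]:.2e}")
```

The off-diagonal entries of $F$ are what prevent $\Psi_0$ from factorizing over sites; with $m > 0$ they decay roughly exponentially in $r$.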

This is not such a mysterious property. If your quantum field is in

the ground state, and you measure the value of the field at some x to

be φ(x), then all this means is that nearby field values are likely to also

be close to φ(x). This is just because the ground state wave functional

is biggest for classical fields that vary slowly in space.

You might wonder if this entanglement somehow violates causality.

Long story short, it doesn’t. This entanglement can’t be used to send

information faster than light. (However, it does have some unintuitive

consequences, such as the Reeh–Schlieder theorem.)

Let me wrap this up by saying what this has to do with the Firewall

paradox. Remember, in this section we have only discussed QFT in flat

space! However, while the space-time at the horizon of a black hole is

curved, it isn’t curved that much. Locally, it looks pretty flat. Therefore, one would expect quantum fields in the vicinity of the horizon to behave much like they would in flat space. This means that low-energy quantum field states will still have a strong amount of short-range entanglement, because short-range entanglement lowers the energy of the state. (This is because of the $(\nabla\hat\phi)^2$ term in the Hamiltonian.) However, the Firewall paradox uses the existence of this entanglement across the horizon to derive a contradiction. One resolution to the contradiction is to say that there’s absolutely no entanglement across the horizon whatsoever. This would mean that there is an infinite energy density at the horizon, contradicting the assumption that nothing particularly special happens there.


4 Statistical Mechanics

4.1 Entropy

Statistical Mechanics is a branch of physics that pervades all other

branches. Statistical mechanics is relevant to Newtonian mechanics,

relativity, quantum mechanics, and quantum field theory.

Figure 28: Statistical mechanics applies to all realms of physics.

Its exact incarnation is a little different in each quadrant, but the

basic details are identical.

The most important quantity in statistical mechanics is called “en-

tropy,” which we label by S. People sometimes say that entropy is a

measure of the “disorder” of a system, but I don’t think this is a good way

to think about it. But before we define entropy, we need to discuss two

different notions of state: “microstates” and “macrostates.”

In physics, we like to describe the real world as mathematical objects.

In classical physics, states are points in a “phase space.” Say for example

you had N particles moving around in 3 dimensions. It would take 6N

real numbers to specify the physical state of this system at a given

instant: 3 numbers for each particle’s position and 3 numbers for each

particle’s momentum. The phase space for this system would therefore just be $\mathbb{R}^{6N}$:

$$(x_1, y_1, z_1, p_{x_1}, p_{y_1}, p_{z_1}, \dots, x_N, y_N, z_N, p_{x_N}, p_{y_N}, p_{z_N}) \in \mathbb{R}^{6N}$$

(In quantum mechanics, states are vectors in a Hilbert space H instead

of points in a phase space. We’ll return to the quantum case a bit later.)


A “microstate” is a state of the above form. It contains absolutely

all the physical information that an omniscient observer could know. If

you were to know the exact microstate of a system and knew all of the

laws of physics, you could in principle deduce what the microstate will

be at all future times and what the microstate was at all past times.

However, practically speaking, we can never know the true microstate

of a system. For example, you could never know the positions and mo-

menta of every damn particle in a box of gas. The only things we can

actually measure are macroscopic variables such as internal energy, vol-

ume, and particle number (U, V, N). A “macrostate” is just a set of

microstates. For example, the “macrostate” of a box of gas labelled by

(U, V, N) would be the set of all microstates with energy U, volume V ,

and particle number N. The idea is that if you know what macrostate

your system is in, you know that your system is equally likely to truly

be in any of the microstates it contains.

Figure 29: You may know the macrostate, but only God knows the

microstate.

I am now ready to define what entropy is. Entropy is a quantity associated with a macrostate. If a macrostate is just a set of $\Omega$ microstates, then the entropy $S$ of the system is

$$S \equiv k\log\Omega. \tag{48}$$

Here, $k$ is Boltzmann’s constant. It is a physical constant with units of energy / temperature.

$$k \equiv 1.38065\times 10^{-23}\ \frac{\text{Joules}}{\text{Kelvin}} \tag{49}$$

The only reason that we need k to define S is because the human race

defined units of temperature before they defined entropy. (We’ll see

how temperature factors into any of this soon.) Otherwise, we probably

would have set k = 1 and temperature would have the same units as

energy.

You might be wondering how we actually count $\Omega$. As you probably noticed, the phase space $\mathbb{R}^{6N}$ is not discrete. In that situation, we integrate over a phase space volume with the measure

$$d^3x_1\,d^3p_1\cdots d^3x_N\,d^3p_N.$$

However, this isn’t completely satisfactory because position and mo-

mentum are dimensionful quantities while Ω should be a dimensionless

number. We should therefore divide by a constant with units of posi-

tion times momentum. Notice, however, that because S only depends

on log Ω, any constant rescaling of Ω will only alter S by a constant and

will therefore never affect the change in entropy ∆S of some process. So

while we have to divide by a constant, whichever constant we divide by

doesn’t affect the physics.

Anyway, even though we are free to choose whatever dimensionful

constant we want, the “best” is actually Planck’s constant h! Therefore,

for a classical macrostate that occupies a phase space volume Vol,

$$\Omega = \frac{1}{N!}\,\frac{1}{h^{3N}}\int_{\rm Vol}\prod_{i=1}^{N} d^3x_i\,d^3p_i. \tag{50}$$

(The prefactor 1/N! is necessary if all N particles are indistinguishable.

It is the cause of some philosophical consternation but I don’t want to

get into any of that.)
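Here is a sketch of Eq. 50 for the simplest case, $N = 1$, together with the earlier point that the choice of dividing constant shifts $S$ but not $\Delta S$. The macrostate (one particle in a box of volume $V$ with $|p| \le p_{\max}$, phase-space volume $V\cdot\frac43\pi p_{\max}^3$) and all numbers are hypothetical choices for illustration; the code doubles $V$ and compares two choices of the constant, $h$ and $10h$.

```python
import math

H_PLANCK = 6.62607015e-34   # J s
K_B = 1.380649e-23          # J / K


def omega_one_particle(volume, p_max, cell=H_PLANCK):
    """Eq. (50) with N = 1: phase-space volume of one particle in a box of the
    given volume with |p| <= p_max, divided by cell**3 (h**3 in the text)."""
    return volume * (4 / 3) * math.pi * p_max ** 3 / cell ** 3


p_max = 1e-24   # kg m/s, an arbitrary illustrative cutoff
results = []
for cell in (H_PLANCK, 10 * H_PLANCK):      # two choices of the constant
    s_small = K_B * math.log(omega_one_particle(1e-3, p_max, cell))
    s_big = K_B * math.log(omega_one_particle(2e-3, p_max, cell))
    results.append((s_small, s_big - s_small))
    print(f"cell = {cell:.3e}: S = {s_small:.4e} J/K, dS = {s_big - s_small:.4e} J/K")
```

Both rows give $\Delta S = k\log 2$, while $S$ itself differs by $3k\log 10$ between them.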

Let me now explain why I think saying entropy is “disorder” is not

such a good idea. Different observers might describe reality with differ-

ent macrostates. For example, say your room is very messy and disor-

ganized. This isn’t a problem for you, because you spend a lot of time


in there and know where everything is. Therefore, the macrostate you

use to describe your room contains very few microstates and has a small

entropy. However, according to your mother who has not studied your

room very carefully, the entropy of your room is very large. The point

is that while everyone might agree your room is messy, the entropy of

your room really depends on how little you know about it.

4.2 Temperature and Equilibrium

Let’s say we label our macrostates by their total internal energy

U and some other macroscopic variables like V and N. (Obviously,

these other macroscopic variables V and N can be replaced by different

quantities in different situations, but let’s just stick with this for now.)

Our entropy S depends on all of these variables.

S = S(U, V, N) (51)

The temperature $T$ of the $(U, V, N)$ macrostate is then defined to be

$$\frac{1}{T} \equiv \left(\frac{\partial S}{\partial U}\right)_{V,N}. \tag{52}$$

The partial derivative above means that we just differentiate S(U, V, N)

with respect to U while keeping V and N fixed.

If your system has a high temperature and you add a bit of energy

dU to it, then the entropy S will not change much. If your system has a

small temperature and you add a bit of energy, the entropy will increase

a lot.

Next, say you have two systems A and B which are free to trade

energy back and forth.

Figure 30: Two systems A and B trading energy. $U_A + U_B$ is fixed.

Say system A could be in one of $\Omega_A$ possible microstates and system B could be in $\Omega_B$ possible microstates. Then the total AB system could be in $\Omega_A\Omega_B$ possible microstates. Therefore, the entropy $S_{AB}$ of both systems combined is just the sum of the entropies of both sub-systems:

$$S_{AB} = k\log(\Omega_A\Omega_B) = k\log\Omega_A + k\log\Omega_B = S_A + S_B \tag{53}$$
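A minimal sketch of Eq. 48 and the additivity in Eq. 53. The macrostates are hypothetical toys of our choosing: “$n$ heads among $N$ coins,” which contains $\binom{N}{n}$ microstates. Python’s `math.comb` gives exact integer counts and `math.log` accepts arbitrarily large integers.

```python
import math

K_B = 1.380649e-23   # J / K


def entropy(omega, k=K_B):
    """S = k log(Omega), Eq. (48)."""
    return k * math.log(omega)


# Hypothetical toy macrostates: "n heads among N coins" has C(N, n) microstates.
omega_a = math.comb(100, 50)
omega_b = math.comb(60, 10)

s_joint = entropy(omega_a * omega_b)          # the AB system has Omega_A * Omega_B
s_sum = entropy(omega_a) + entropy(omega_b)   # right-hand side of Eq. (53)
print(s_joint, s_sum)
```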

The crucial realization of statistical mechanics is that, all else being

equal, a system is most likely to find itself in a macrostate corresponding

to the largest number of microstates. This is the so-called “Second law

of thermodynamics”: for all practical purposes, the entropy of a closed system always increases over time. It is not really a physical “law” in the regular sense; it is more like a profound realization.

Therefore, the entropy $S_{AB}$ of our joint AB system will increase as time goes on until it reaches its maximum possible value. In other words, A and B trade energy in a seemingly random fashion that increases $S_{AB}$ on average. When $S_{AB}$ is finally maximized, we say that our systems are in “thermal equilibrium.”

Figure 31: $S_{AB}$ is maximized when $U_A$ has some particular value.

(It should be noted that there will actually be tiny random "thermal"

fluctuations around this maximum.)

Let’s say that the internal energy of system A is $U_A$ and the internal energy of system B is $U_B$. Crucially, note that the total energy of the combined system

$$U_{AB} = U_A + U_B$$

is constant over time! This is because energy of the total system is conserved. Therefore,

$$dU_A = -dU_B.$$

Now, the combined system will maximize its entropy when $U_A$ and $U_B$ have some particular values. Knowing the value of $U_A$ is enough, though, because $U_B = U_{AB} - U_A$. Therefore, entropy is maximized when

$$0 = \frac{\partial S_{AB}}{\partial U_A}. \tag{54}$$

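Using $dU_B = -dU_A$, we can rewrite this as

$$0 = \frac{\partial S_{AB}}{\partial U_A} = \frac{\partial S_A}{\partial U_A} + \frac{\partial S_B}{\partial U_A} = \frac{\partial S_A}{\partial U_A} - \frac{\partial S_B}{\partial U_B} = \frac{1}{T_A} - \frac{1}{T_B}.$$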

Therefore, our two systems are in equilibrium if they have the same temperature!

$$T_A = T_B \tag{55}$$
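Here is a sketch of this argument on a standard toy model that these notes do not use: two “Einstein solids” (collections of $N_A$ and $N_B$ quantum oscillators) sharing $q$ quanta of energy, where a solid with $n$ oscillators and $q$ quanta has $\binom{q+n-1}{q}$ microstates. Units are $k = 1$ and one quantum equals one unit of energy; the sizes are arbitrary. The code finds the energy split that maximizes $S_{AB}$ and then checks that $\partial S/\partial U$, i.e. $1/T$, is nearly the same for both solids there.

```python
import math

# Hypothetical toy model: two Einstein solids sharing Q_TOTAL energy quanta.
# Units: k = 1 and one quantum = 1 unit of energy, so dS/dq plays the role of 1/T.
N_A, N_B, Q_TOTAL = 300, 200, 100


def entropy(n, q):
    """S = log C(q + n - 1, q), computed with log-gamma to avoid huge integers."""
    return math.lgamma(q + n) - math.lgamma(q + 1) - math.lgamma(n)


def total_entropy(q_a):
    return entropy(N_A, q_a) + entropy(N_B, Q_TOTAL - q_a)


best_q_a = max(range(1, Q_TOTAL), key=total_entropy)
q_b = Q_TOTAL - best_q_a

# Central differences approximate 1/T_A = dS_A/dU_A and 1/T_B = dS_B/dU_B
inv_t_a = (entropy(N_A, best_q_a + 1) - entropy(N_A, best_q_a - 1)) / 2
inv_t_b = (entropy(N_B, q_b + 1) - entropy(N_B, q_b - 1)) / 2
print(best_q_a, q_b, inv_t_a, inv_t_b)   # the two 1/T values nearly agree
```

The energy ends up split roughly in proportion to the number of oscillators, which is the split that equalizes $1/T$ for this model.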

If there are other macroscopic variables we are using to define our macrostates, like volume $V$ or particle number $N$, then there will be other quantities that must be equal in equilibrium, assuming our two systems compete for volume or trade particles back and forth. In these cases, we define the quantities $P$ and $\mu$ to be

$$\frac{P}{T} \equiv \left(\frac{\partial S}{\partial V}\right)_{U,N} \qquad \frac{\mu}{T} \equiv -\left(\frac{\partial S}{\partial N}\right)_{U,V}. \tag{56}$$

$P$ is called “pressure” and $\mu$ is called “chemical potential.” In equilibrium, we would also have

$$P_A = P_B \qquad \mu_A = \mu_B. \tag{57}$$

(You might object that pressure has another definition, namely force di-

vided by area. It would be incumbent on us to check that this definition

matches that definition in the relevant situation where both definitions

have meaning. Thankfully it does.)


4.3 The Partition Function

Figure 32: If you want to do statistical mechanics, you really should

know about the partition function.

Explicitly calculating Ω for a given macrostate is usually very hard.

Practically speaking, it can only be done for simple systems you under-

stand very well. However, physicists have developed an extremely pow-

erful way of doing statistical mechanics even for complicated systems.

It turns out that there is a function of temperature called the “partition

function” that contains all the information you’d care to know about

your macrostate when you are working in the “thermodynamic limit.”

This function is denoted $Z(T)$. Once you compute $Z(T)$ (which is usually much easier than computing $\Omega$), it is a simple matter to extract the relevant physics.

Before defining the partition function, I would like to talk a bit about heat baths. Say you have some system $\mathcal{S}$ in a very large environment $\mathcal{E}$. Say you can measure the macroscopic variables of $\mathcal{S}$, including its energy $E$ at any given moment. (We use $E$ here to denote energy instead of $U$ when talking about the partition function.) The question I ask is: if the total system has a temperature $T$, what’s the probability that $\mathcal{S}$ has some particular energy $E$?


Figure 33: A large environment $\mathcal{E}$ and system $\mathcal{S}$ have a fixed total energy $E_{\rm tot}$. $\mathcal{E}$ is called a “heat bath” because it is very big. The combined system has a temperature $T$.

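We should picture $\mathcal{S}$ and $\mathcal{E}$ evolving in some complicated way we can’t understand. However, their total energy

$$E_{\rm tot} = E + E_{\mathcal{E}} \tag{58}$$

is conserved. We now define

$$\Omega_{\mathcal{S}}(E) \equiv \text{num. microstates of } \mathcal{S} \text{ with energy } E \tag{59}$$

$$\Omega_{\mathcal{E}}(E_{\mathcal{E}}) \equiv \text{num. microstates of } \mathcal{E} \text{ with energy } E_{\mathcal{E}}.$$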

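Therefore, the probability that $\mathcal{S}$ has some energy $E$ is proportional to the number of microstates where $\mathcal{S}$ has energy $E$ and $\mathcal{E}$ has energy $E_{\rm tot} - E$:

$$\text{Prob}(E) \propto \Omega_{\mathcal{S}}(E)\,\Omega_{\mathcal{E}}(E_{\rm tot} - E) \tag{60}$$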

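Here is the important part. Say that our heat bath has a lot of energy: $E_{\rm tot} \gg E$. As far as the heat bath is concerned, $E$ is a very small amount of energy. Therefore,

$$\Omega_{\mathcal{E}}(E_{\rm tot} - E) = \exp\left(\frac{1}{k}S_{\mathcal{E}}(E_{\rm tot} - E)\right) \approx \exp\left(\frac{1}{k}S_{\mathcal{E}}(E_{\rm tot}) - \frac{E}{kT}\right)$$

by Taylor expanding $S_{\mathcal{E}}$ in $E$ and using the definition of temperature: to first order, $S_{\mathcal{E}}(E_{\rm tot} - E) \approx S_{\mathcal{E}}(E_{\rm tot}) - E\,\partial S_{\mathcal{E}}/\partial E_{\mathcal{E}} = S_{\mathcal{E}}(E_{\rm tot}) - E/T$.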

We now have

$$\text{Prob}(E) \propto \Omega_{\mathcal{S}}(E)\exp\left(-\frac{E}{kT}\right).$$

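$\Omega_{\mathcal{S}}(E)$ is sometimes called the “degeneracy” of $E$. In any case, we can easily see what the ratio of $\text{Prob}(E_1)$ and $\text{Prob}(E_2)$ must be:

$$\frac{\text{Prob}(E_1)}{\text{Prob}(E_2)} = \frac{\Omega_{\mathcal{S}}(E_1)\,e^{-E_1/kT}}{\Omega_{\mathcal{S}}(E_2)\,e^{-E_2/kT}}$$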

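Furthermore, we can use the fact that all probabilities must sum to 1 in order to calculate the absolute probability. We define

$$Z(T) \equiv \sum_E \Omega_{\mathcal{S}}(E)\,e^{-E/kT} = \sum_s e^{-E_s/kT} \tag{61}$$

where $\sum_s$ is a sum over all states $s$ of $\mathcal{S}$, and $E_s$ is the energy of state $s$. Finally, we have

$$\text{Prob}(E) = \frac{\Omega_{\mathcal{S}}(E)\,e^{-E/kT}}{Z(T)} \tag{62}$$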

However, more than being a mere proportionality factor, $Z(T)$ takes on a life of its own, so it is given the special name of the “partition function.” Interestingly, $Z(T)$ is a function that depends on $T$ and not $E$. It is not a function that has anything to do with a particular macrostate. Rather, it is a function that has to do with every microstate at some temperature. Oftentimes, we also define

$$\beta \equiv \frac{1}{kT}$$

and write

$$Z(\beta) = \sum_s e^{-\beta E_s}. \tag{63}$$

The partition function $Z(\beta)$ has many amazing properties. For one, it can be used to write an endless number of clever identities. Here is one. Say you want to compute the expected energy $\langle E\rangle$ your system has at temperature $T$:

$$\langle E\rangle = \sum_E E\,\text{Prob}(E) = \frac{\sum_s E_s\,e^{-\beta E_s}}{Z(\beta)} = -\frac{1}{Z}\frac{\partial Z}{\partial\beta} = -\frac{\partial}{\partial\beta}\log Z$$

This expresses the expected energy $\langle E\rangle$ as a function of temperature. (We could also calculate $\langle E^n\rangle$ for any $n$ if we wanted to.)
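A minimal sketch of these formulas for a made-up three-level system (the levels and degeneracies are arbitrary, and units are $k = 1$). It computes $\text{Prob}(E)$ from Eq. 62, checks that the probabilities sum to 1, and compares $\langle E\rangle$ computed directly against $-\partial\log Z/\partial\beta$ estimated with a central finite difference.

```python
import math

# Hypothetical system: energy levels E with degeneracies Omega_S(E); units k = 1.
levels = {0.0: 1, 1.0: 3, 2.5: 2}


def log_z(beta):
    """log Z(beta) = log sum_E Omega_S(E) e^{-beta E}, Eqs. (61) and (63)."""
    return math.log(sum(g * math.exp(-beta * e) for e, g in levels.items()))


def prob(e, beta):
    """Prob(E) = Omega_S(E) e^{-beta E} / Z, Eq. (62)."""
    return levels[e] * math.exp(-beta * e - log_z(beta))


beta = 0.7
total = sum(prob(e, beta) for e in levels)
mean_direct = sum(e * prob(e, beta) for e in levels)
h = 1e-6                                      # step for the numerical derivative
mean_from_z = -(log_z(beta + h) - log_z(beta - h)) / (2 * h)
print(total, mean_direct, mean_from_z)
```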

Where the partition function really shines is in the “thermodynamic

limit.” Usually, people define the thermodynamic limit as

N → ∞ (thermodynamic limit) (64)

where N is the number of particles. However, sometimes you might

be interested in more abstract systems like a spin chain (the so-called

“Ising model”) or something else. There are no “particles” in such a

system; however, there is still something you would justifiably call the

thermodynamic limit. This would be when the number of sites in your

spin chain becomes very large. So N should really just be thought of

as the number of variables you need to specify a microstate. When

someone is “working in the thermodynamic limit,” it just means that

they are considering very “big” systems.

Of course, in real life $N$ is never infinite. However, I think we can all agree that $10^{23}$ is close enough to infinity for all practical purposes.

Whenever an equation is true “in the thermodynamic limit,” you can imagine that there are extra terms of order $\frac{1}{N}$ unwritten in your equation and laugh at them.

What is special about the thermodynamic limit is that $\Omega_{\mathcal{S}}$ becomes, like, really big...

$$\Omega_{\mathcal{S}} = (\text{something})^N$$

Furthermore, the entropy and energy will scale with $N$:

$$S_{\mathcal{S}} = NS_1 \qquad E = NE_1$$

In the above equation, $S_1$ and $E_1$ can be thought of as the average entropy and energy per particle.

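Therefore, we can rewrite

$$\text{Prob}(E) \propto \Omega_{\mathcal{S}}(E)\exp\left(-\frac{1}{kT}E\right) = \exp\left(\frac{1}{k}S_{\mathcal{S}} - \frac{1}{kT}E\right) = \exp\left(N\left(\frac{1}{k}S_1 - \frac{1}{kT}E_1\right)\right).$$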

The thing to really gawk at in the above equation is that the probability that $\mathcal{S}$ has some energy $E$ is given by

$$\text{Prob}(E) \propto e^{N(\dots)}.$$

I want you to appreciate how insanely big $e^{N(\dots)}$ is in the thermodynamic limit. Furthermore, if there is even a minuscule change in $(\dots)$, $\text{Prob}(E)$ will change radically. Therefore, $\text{Prob}(E)$ will be extremely concentrated at some particular energy, and deviating slightly from that maximum will cause $\text{Prob}(E)$ to plummet.
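To watch this concentration happen, here is a sketch using a toy model of our choosing (not from the text): $N$ independent two-level units with energies $0$ or $\epsilon$, so that $\Omega_{\mathcal{S}}(E = n\epsilon) = \binom{N}{n}$ and $\text{Prob}(E) \propto \binom{N}{n}e^{-\beta n\epsilon}$ (units $k = 1$). For this model the relative width of the distribution is $\sqrt{(1-p)/(Np)}$ with $p = e^{-\beta\epsilon}/(1+e^{-\beta\epsilon})$, so it shrinks like $1/\sqrt{N}$.

```python
import math

import numpy as np


def energy_distribution(n_units, beta, eps=1.0):
    """Prob(E) for n_units independent two-level units with energies 0 or eps
    (units k = 1). Energy E = n * eps has Omega_S(E) = C(n_units, n)."""
    n = np.arange(n_units + 1)
    log_omega = np.array([
        math.lgamma(n_units + 1) - math.lgamma(j + 1) - math.lgamma(n_units - j + 1)
        for j in range(n_units + 1)
    ])
    log_p = log_omega - beta * eps * n
    p = np.exp(log_p - log_p.max())      # shift by the max to avoid overflow
    return n * eps, p / p.sum()


widths = {}
for n_units in (100, 10_000):
    energies, p = energy_distribution(n_units, beta=1.0)
    mean = np.sum(energies * p)
    std = np.sqrt(np.sum((energies - mean) ** 2 * p))
    widths[n_units] = std / mean
    print(n_units, mean, widths[n_units])   # relative width shrinks like 1/sqrt(N)
```

With $N$ of order $10^{23}$, the same formula gives a relative width of order $10^{-12}$, which is why the energy is effectively sharp.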

Figure 34: In the thermodynamic limit, the system S will have a well

defined energy.

We can therefore see that if the energy $U$ maximizes $\text{Prob}(E)$, we will essentially have

$$\text{Prob}(E) \approx \begin{cases} 1 & \text{if } E = U \\ 0 & \text{if } E \neq U \end{cases}.$$

Let’s now think back to our previously derived equation

$$\langle E\rangle = -\frac{\partial}{\partial\beta}\log Z(\beta).$$

Recall that hEi is the expected energy of S when it is coupled to a heat

bath at some temperature. The beauty is that in the thermodynamic

limit where our system S becomes very large, we don’t even have to

think about the heat bath anymore! Our system S is basically just in

the macrostate where all microstates with energy U are equally likely.

Therefore,

$$\langle E\rangle = U \quad\text{(thermodynamic limit)}$$

and

$$U = -\frac{\partial}{\partial\beta}\log Z(\beta) \tag{65}$$

is an exact equation in the thermodynamic limit.

Let’s just appreciate this for a second. Our original definition of $S(U)$ was

$$S(U) = k\log(\Omega(U))$$

and our original definition of temperature was

$$\frac{1}{T} = \frac{\partial S}{\partial U}.$$

In other words, $T$ is a function of $U$. However, we totally reversed logic when we coupled our system to a larger environment. We no longer knew what the exact energy of our system was. I am now telling you that instead of calculating $T$ as a function of $U$, when $N$ is large we are actually able to calculate $U$ as a function of $T$! Therefore, instead of having to calculate $\Omega(U)$, we can just calculate $Z(T)$ instead.

I should stress, however, that $Z(T)$ is still a perfectly worthwhile thing to calculate even when your system $\mathcal{S}$ isn’t “big.” It will still give you the exact average energy $\langle E\rangle$ when your system is in equilibrium with a bigger environment at some temperature. What’s special about the thermodynamic limit is that you no longer have to imagine the heat bath is there in order to interpret your results, because any “average quantity” will basically just be an actual, sharply defined “quantity.” In short,

$$Z(\beta) = \Omega(U)\,e^{-\beta U} \quad\text{(thermodynamic limit)} \tag{66}$$

It’s worth mentioning that the other contributions to $Z(\beta)$ will also be absolutely huge; they just won’t be as stupendously huge as the term due to $U$.
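A toy model of $N$ two-level units (energies 0 and 1, $k = 1$; an assumption chosen for illustration, not from the text) lets us test Eq. 66 directly. Here $\log Z = N\log(1+e^{-\beta})$ exactly, while $\log[\Omega(U)e^{-\beta U}]$ uses $U = \langle E\rangle$ and a binomial count extended to non-integer $U$ via log-gamma. The gap between the two grows only like $\log N$ while $\log Z$ grows like $N$, so the relative gap shrinks as $N$ grows.

```python
import math


def compare(n_units, beta=1.0):
    """For n_units two-level units (energies 0 and 1, k = 1): exact log Z
    versus log[Omega(U) e^{-beta U}] from Eq. (66), and their relative gap."""
    log_z = n_units * math.log1p(math.exp(-beta))
    frac = math.exp(-beta) / (1 + math.exp(-beta))   # fraction of excited units
    u = n_units * frac                                 # U = <E>, Eq. (65)
    # log C(N, U) with a non-integer U via log-gamma
    log_omega = (math.lgamma(n_units + 1) - math.lgamma(u + 1)
                 - math.lgamma(n_units - u + 1))
    approx = log_omega - beta * u
    return log_z, approx, (log_z - approx) / log_z


for n_units in (100, 10_000, 1_000_000):
    print(n_units, compare(n_units))   # the relative gap shrinks as N grows
```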

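Okay, enough adulation for the partition function. Let’s do something with it again. Using the above equation, there is a very easy way to figure out what $S_{\mathcal{S}}(U)$ is in terms of $Z(\beta)$:

$$\begin{aligned} S_{\mathcal{S}}(U) &= k\log\Omega_{\mathcal{S}}(U) \\ &= k\log\left(Z\,e^{\beta U}\right) \quad\text{(thermodynamic limit)} \\ &= k\log Z + k\beta U \\ &= k\left(1 - \beta\frac{\partial}{\partial\beta}\right)\log Z \end{aligned}$$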