Too Much Data: Prices and Inefficiencies in Data Markets∗

Daron Acemoglu†, Ali Makhdoumi‡, Azarakhsh Malekian§, Asu Ozdaglar¶

Abstract

When a user shares her data with an online platform, she typically reveals relevant information about other users. We model a data market in the presence of this type of externality in a setup where one or multiple platforms estimate a user’s type with data they acquire from all users and (some) users value their privacy. We demonstrate that the data externalities depress the price of data because once a user’s information is leaked by others, she has less reason to protect her data and privacy. These depressed prices lead to excessive data sharing. We characterize conditions under which shutting down data markets improves (utilitarian) welfare. Competition between platforms does not redress the problem of excessively low price for data and too much data sharing, and may further reduce welfare. We propose a scheme based on mediated data sharing that improves efficiency.

Keywords: data, informational externalities, online markets, privacy.

JEL Classification: D62, L86, D83.

∗ We are grateful to Alessandro Bonatti and Hal Varian for useful conversations and comments. We gratefully acknowledge financial support from Google, Microsoft, the National Science Foundation, and the Toulouse Network on Information Technology.

† Department of Economics, Massachusetts Institute of Technology, Cambridge, MA 02139.

‡ Fuqua School of Business, Duke University, Durham, NC 27708.

§

¶ Department of Electrical Engineering and Computer Science, Massachusetts Institute of Technology, Cambridge, MA 02139.

1 Introduction

The data of billions of individuals are currently being utilized for personalized advertising or other online services.¹ The use and transaction of individual data are set to grow exponentially in the coming years with more extensive data collection from new online apps and integrated technologies such as the Internet of Things and with the more widespread applications of artificial intelligence (AI) and machine learning techniques. Most economic analyses emphasize benefits from the use and sharing of data because this permits better customization, better information, and more input into AI applications. It is often claimed that because data enable a better allocation of resources and more or higher-quality innovation, the market mechanism generates too little data sharing (e.g., Varian [2009], Jones and Tonetti [2018], Veldkamp et al. [2019], and Veldkamp [2019]). Economists have recognized that consumers might have privacy concerns (e.g., Stigler [1980], Posner [1981], and Varian [2009]), but have often argued that data markets could appropriately balance privacy concerns and the social benefits of data (e.g., Laudon [1996] and Posner and Weyl [2018]). In any case, the willingness of the majority of users to allow their data to be used for no or very little direct benefit is argued to be evidence that most users place only a small value on privacy.²

This paper, in contrast, argues that there are forces that will make individual-level data underpriced and the market economy generate too much data. The reason is simple: when an individual shares her data, she compromises not only her own privacy but also the privacy of other individuals whose information is correlated with hers. This negative externality tends to create excessive data sharing. Moreover, when there is excessive data sharing, each individual will overlook her privacy concerns and part with her own information because others’ sharing decisions will have already revealed much about her.

Some of the issues we emphasize are highlighted by the Cambridge Analytica scandal. The company acquired the private information of millions of individuals from data shared by 270,000 Facebook users who voluntarily downloaded an app for mapping their personality traits, called “This is your digital life”. The app accessed users’ news feed, timeline, posts and messages, and revealed information about other Facebook users. Cambridge Analytica was ultimately able to infer valuable information about more than 50 million Facebook users, which it deployed for designing personalized political messages and advertising in the Brexit referendum and 2016 US presidential election.³

Though some of the circumstances of this scandal are unique, the issues are general. For example, when an individual shares information about his own behavior, habits and preferences, this contains considerable information about the behavior, habits and preferences of not only his friends but also other people with similar characteristics (e.g., the routines and choices of a highly educated gay man from Central America in his early 20s in Somerville, Massachusetts are informative about others with the same profile and residing in the same area).

¹ Facebook alone has almost 2.5 billion monthly active users.

² Consumers often report valuing privacy (e.g., Westin [1968]; Goldfarb and Tucker [2012]), but do not take much action to protect their privacy (e.g., “Why your inbox is crammed full of privacy policies”, WIRED, May 24, 2018 and Athey et al. [2017]).

³ See New York Times, March 19, 2018; New York Times, March 13, 2018; and The Guardian, April 13, 2018.

The following example illustrates the nature of the problem, introduces some of our key concepts, and clarifies why there will be excessive data sharing and very little willingness to protect privacy on the part of users.

Consider a platform with two users, $i = 1, 2$. Each user owns her own personal data, which we represent with a random variable $X_i$ (from the viewpoint of the platform). The relevant data of the two users are related, which we capture by assuming that their random variables are jointly normally distributed with mean zero and correlation coefficient $\rho$. The platform can acquire or buy the data of a user in order to better estimate her preferences or actions. Its objective is to minimize the mean square error of its estimates of user types, or equivalently to maximize the amount of leaked information about them. Suppose that the valuation (in monetary terms) of the platform for the users’ leaked information is one, while the value that the first user attaches to her privacy, again in terms of leaked information about her, is 1/2 and for the second user it is $v > 0$. We also assume that the platform makes take-it-or-leave-it offers to the users to purchase their data. In the absence of any restrictions on data markets or transaction costs, the first user will always sell her data (because her valuation of privacy, 1/2, is less than the value of information to the platform, 1). But given the correlation between the types of the two users, this implies that the platform will already have a fairly good estimate of the second user’s information. Suppose, for illustration, that $\rho \approx 1$. In this case, the platform will know almost everything relevant about user 2 from user 1’s data, and this undermines the willingness of user 2 to protect her data. In fact, since user 1 is revealing almost everything about her, user 2 would be willing to sell her own data for a very low price (approximately 0 given $\rho \approx 1$). But once the second user is selling her data, this also reveals the first user’s data, so the first user can only charge a very low price for her data. Therefore in this simple example, the platform will be able to acquire both users’ data at approximately zero price. Critically, however, this price does not reflect the users’ valuation of privacy. When $v \le 1$, the equilibrium is efficient because data are socially beneficial in this case (even if data externalities change the distribution of economic surplus to the advantage of the platform). However, it can be arbitrarily inefficient when $v$ is sufficiently high. This is because the first user, by selling her data, is creating a negative externality on the second user.

This simple example captures many of the economic ideas of the paper. Our formal analysis generalizes these insights by considering a community of users whose information is correlated in an arbitrary fashion and who have heterogeneous valuations of privacy. We also analyze this model both under a monopoly platform and under competition between platforms trying to simultaneously attract users and acquire their data.

Our main results correspond to generalizations of the insights summarized by the preceding example. First, we introduce our general framework and characterize the first-best allocation, which maximizes the sum of surplus of users and platforms. The first best typically involves considerable data transactions, but those individuals creating significant (negative) externalities on others should not share their data. Second, we establish the existence of an equilibrium and characterize the prices at which data will be transacted. This characterization clarifies how the market price of data for a user and the distribution of surplus depend on information leaked by other users. Third and more importantly, we provide conditions under which the equilibrium in the data market is inefficient as well as conditions for simple restrictions on markets to improve welfare. At the root of these inefficiencies are the economic forces already highlighted by our example: inefficiencies arise when a subset of users are willing to part with their data, which are informative about other users whose value of privacy is high. We show that these insights extend to environments with competing platforms and incomplete information as well.

We further investigate various policy approaches to data markets. Person-specific taxes on data transactions can restore the first best, but are impractical. We show in addition how uniform taxation on all data transactions might, under some conditions, improve welfare. Finally, we propose a new regulation scheme where data transactions are mediated in a way that reduces their correlation with the data of other users, thus minimizing leaked information about others. We additionally develop a procedure for implementing this scheme based on “de-correlation”, meaning transforming users’ data so that their correlation with others’ data and types is removed.⁴

Our paper relates to the literature on privacy and its legal and economic aspects. The classic definition of privacy, proposed by Warren and Brandeis in 1890, is the protection of someone’s personal space and the right to be let alone (Warren and Brandeis [1890]). Relatedly, and more relevant to our focus, Westin [1968] defines it as the control over and safeguarding of personal information, and this perspective has been explored from various angles in recent work (e.g., Pasquale [2015], Tirole [2019], Zuboff [2019]). More closely related to our paper are MacCarthy [2010], Fairfield and Engel [2015], Choi et al. [2019], and Bergemann et al. [2019]. MacCarthy [2010] and Fairfield and Engel [2015] are the first contributions we are aware of that emphasize externalities in data sharing, which play a central role in our analysis. More recently, Choi et al. [2019] develop a reduced-form model with a related informational externality and a number of results similar to our excessive information sharing finding. There are several important differences between this paper and ours, however. First, Choi et al. [2019] do not model how data sharing creates an externality and simply assume that consumer welfare depends negatively on the number of other consumers on an online platform. In contrast, much of our analysis is devoted to the study of how the correlation structure across different users jointly determines sharing decisions and the amount of leaked information. Second, they assume that consumers are identical, while our above example already illustrates that heterogeneity in privacy concerns plays a critical role in the inefficiencies in data markets. Indeed, our analysis highlights that there are only limited inefficiencies when all users are homogeneous (specifically, the equilibrium is efficient in this case when they have low or sufficiently high value of privacy). Third, their paper does not analyze the case with competing platforms. Finally, Bergemann et al. [2019] also study an environment with data externalities. Though there are some parallels between the two papers, their work is different from and largely complementary to ours. In particular, they analyze an economy with symmetric users where there is a monopolist platform and data are used by this monopolist or other downstream firms (such as advertisers) for price discrimination. They focus on the implications for market prices, profits, and efficiency of the structure of the downstream market and of whether data are collected in an anonymized or non-anonymized form.

⁴ This de-correlation procedure is different from anonymization of data because it does not hide information about the user sharing her data but about others who are correlated with this user.

Our paper also relates to the growing literature on information markets. One branch of this literature focuses on the use of personal data for improved allocation of online resources (e.g., Bergemann and Bonatti [2015], Goldfarb and Tucker [2011], and Montes et al. [2018]). Another branch investigates how information can be monetized either by dynamic sales or optimal mechanisms. For example, Anton and Yao [2002], Babaioff et al. [2012], Eső and Szentes [2007], Hörner and Skrzypacz [2016], Bergemann et al. [2018], and Eliaz et al. [2019] consider either static or dynamic mechanisms for selling data, Ghosh and Roth [2015] use the differential privacy framework of Dwork and Roth [2014] to study mechanism design with privacy constraints, and Admati and Pfleiderer [1986] and Begenau et al. [2018] study markets for financial data. A third branch focuses on optimal collection and acquisition of information, for example, Agarwal et al. [2018], Chen and Zheng [2018], and Chen et al. [2018]. Lastly, a number of papers investigate the question of whether information harms consumers, either because users are unaware of the data being collected about them (Taylor [2004]) or for reasons related to price discrimination (Acquisti and Varian [2005]). See Acquisti et al. [2016], Bergemann and Bonatti [2019], and Agrawal et al. [2018] for excellent surveys of different aspects of this literature.

The rest of the paper proceeds as follows. Section 2 presents our model, focusing on the case with a single platform for simplicity. Section 3 provides our main results, in particular, characterizing the structure of equilibria in data markets and highlighting their inefficiency due to data externalities. It also shows how shutting down data markets may improve welfare. Section 4 extends these results to a setting with competing platforms, while Section 5 presents analogous results when the value of privacy of each user is their private information. Section 6 shows that two types of policies, taxes and third-party-mediated information sharing, can improve welfare. Section 7 concludes, while Appendix A presents the proofs of some of the results stated in the text and the online Appendix B contains the remaining proofs and additional results.

2 Model

In this section we introduce our model, focusing first on the case with a single platform. Competition between platforms is analyzed in Section 4.

2.1 Information and Payoffs

We consider $n$ users represented by the set $V = \{1, \ldots, n\}$. Each user $i \in V$ has a type denoted by $x_i$, which is a realization of a random variable $X_i$. We assume that the vector of random variables $X = (X_1, \ldots, X_n)$ has a joint normal distribution $\mathcal{N}(0, \Sigma)$, where $\Sigma \in \mathbb{R}^{n \times n}$ is the covariance matrix of $X$. Let $\Sigma_{ij}$ designate the $(i, j)$-th entry of $\Sigma$ and $\Sigma_{ii} = \sigma_i^2 > 0$ denote the variance of individual $i$’s type.

Each user has some personal data, $S_i$, which is informative about her type (for example, based on her past behavior, preferences, or contacts). We suppose that $S_i = X_i + Z_i$, where $Z_i$ is an independent random variable with standard normal distribution, i.e., $Z_i \sim \mathcal{N}(0, 1)$.

For any user joining the platform, the platform can derive additional revenue if it can predict her type. This might be because of improved personalized services, targeted advertising, or price discrimination for some services sold on the platform. Since the exact source of revenue for the platform is immaterial for our analysis, we simply assume that the platform’s revenue from each user is a decreasing function of the mean square error of its forecast of the user’s type, minus what the platform pays to users to acquire their information. Namely, the objective of the platform is to minimize

$$\sum_{i \in V} \Big( \mathbb{E}\big[(\hat{x}_i(S) - X_i)^2\big] - \sigma_i^2 + p_i \Big), \tag{1}$$

where $S$ is the vector of data the platform acquires, $\hat{x}_i(S)$ is the platform’s estimate of the user’s type given this information, $-\sigma_i^2$ is included as a convenient normalization, and $p_i$ denotes payments to user $i$ from the platform (we ignore for simplicity any other transaction costs incurred by the platform and discuss taxes and regulations in Section 6). This payment to the user may be a direct one in an explicit data market or it may be an implicit transfer, for example, in the form of some good or service the platform provides to the user in exchange for her data.

Users value their privacy, which we also model in a reduced-form manner as a function of the same mean square error.⁵ This reflects both pecuniary and nonpecuniary motives, for example, the fact that a user may receive a greater consumer surplus when the platform knows less about her or she may have a genuine demand for keeping her preferences, behavior, and information private. There may also be political and social reasons for privacy, for example, for concealing dissident activities or behaviors disapproved by some groups. We assume, specifically, that user $i$’s value of privacy is $v_i \ge 0$, and her payoff is

$$v_i \Big( \mathbb{E}\big[(\hat{x}_i(S) - X_i)^2\big] - \sigma_i^2 \Big) + p_i.$$

This expression and its comparison with (1) clarify that the platform and users have potentially opposing preferences over information about user type. We have again subtracted $\sigma_i^2$ as a normalization, which ensures that if the platform acquires no additional information about the user and makes no payment to her, her payoff is zero.

Critically, users with $v_i < 1$ value their privacy less than the valuation that the platform attaches to information about them, and thus reducing the mean square error of the estimates of their types is socially beneficial. In contrast, users with $v_i > 1$ value their privacy more, and reducing their mean square error is socially costly. In a world without data externalities (where data about one user have no relevance to the information about other users), the first group of users should allow the platform to acquire (buy) their data, while the second group should not. A simple market mechanism based on prices for data can implement this efficient outcome.

⁵ In this and the next section, we do not model the decision of whether to join the platform. Joining decisions are introduced in Section 4, where we assume that users receive additional services (unrelated to their personal data) from platforms encouraging them to join even in the presence of loss of privacy.

We will see that the situation is very different in the presence of data externalities.

2.2 Leaked Information

A key notion for our analysis is leaked information, which captures the reduction in the mean square error of the platform’s estimate of the type of a user. When the platform has no information about user $i$, its best estimate is the prior mean of zero, so $\mathbb{E}\big[(\hat{x}_i - X_i)^2\big] = \sigma_i^2$. As the platform receives data from this and other users, its estimate improves and the mean square error declines. The notion of leaked information captures this reduction in mean square error.

Specifically, let $a_i \in \{0, 1\}$ denote the data sharing action of user $i \in V$, with $a_i = 1$ corresponding to sharing. Denote the profile of sharing decisions by $a = (a_1, \ldots, a_n)$ and the decisions of agents other than $i$ by $a_{-i}$. We also use the notation $S_a$ to denote the data of all individuals for whom $a_j = 1$, i.e., $S_a = (S_j : j \in V \text{ s.t. } a_j = 1)$. Given a profile of actions $a$, the leaked information of (or about) user $i \in V$ is the reduction in the mean square error of the best estimator of the type of user $i$:

$$I_i(a) = \sigma_i^2 - \min_{\hat{x}_i} \mathbb{E}\big[(X_i - \hat{x}_i(S_a))^2\big].$$

Notably, because of data externalities, leaked information about user $i$ depends not just on her decisions but also on the sharing actions taken by all users.
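Under the Gaussian assumptions of Section 2.1, the best estimator is the conditional mean, so leaked information has a closed form. Write $A = \{j : a_j = 1\}$. Then $\operatorname{Cov}(X_i, S_A) = \Sigma_{iA}$ and $\operatorname{Var}(S_A) = \Sigma_{AA} + \mathbf{I}$ with unit-variance noise, which gives $I_i(a) = \Sigma_{iA}(\Sigma_{AA} + \mathbf{I})^{-1}\Sigma_{Ai}$. The Python sketch below computes this quantity for any covariance matrix and action profile. It uses only the standard library, and the helper names `solve` and `leaked_information` are our own, not the paper's.

```python
def solve(m, b):
    """Solve the linear system m x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    aug = [list(row) + [b[r]] for r, row in enumerate(m)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[piv] = aug[piv], aug[col]
        for r in range(col + 1, n):
            f = aug[r][col] / aug[col][col]
            for k in range(col, n + 1):
                aug[r][k] -= f * aug[col][k]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        s = sum(aug[r][k] * x[k] for k in range(r + 1, n))
        x[r] = (aug[r][n] - s) / aug[r][r]
    return x


def leaked_information(sigma, a, i):
    """I_i(a) = c' V^{-1} c with c = Cov(X_i, S_A) and V = Sigma_AA + I (unit noise)."""
    shared = [j for j, aj in enumerate(a) if aj == 1]
    if not shared:
        return 0.0  # no data: the MSE stays at sigma_i^2
    v_mat = [[sigma[j][k] + (1.0 if j == k else 0.0) for k in shared] for j in shared]
    c = [sigma[i][j] for j in shared]
    w = solve(v_mat, c)
    return sum(cj * wj for cj, wj in zip(c, w))


rho = 0.5
sigma = [[1.0, rho], [rho, 1.0]]
print(leaked_information(sigma, (1, 0), 0))  # own signal only: 1/2
print(leaked_information(sigma, (0, 1), 0))  # only user 2 shares: rho^2 / 2
print(leaked_information(sigma, (1, 1), 0))  # both share: 2 / (4 - rho^2)
```

The two-user values in the comments coincide with those implied by the closed-form mean square errors of Section 3.4.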

With this notion at hand, we can write the payoff of user $i$ given the price vector $p = (p_1, \ldots, p_n)$ as

$$u_i(a_i, a_{-i}, p) = \begin{cases} p_i - v_i I_i(a_i = 1, a_{-i}), & a_i = 1, \\ -v_i I_i(a_i = 0, a_{-i}), & a_i = 0, \end{cases}$$

where, recall, $v_i \ge 0$ is user $i$’s value of privacy.

We also express the platform’s payoff more compactly as

$$U(a, p) = \sum_{i \in V} I_i(a) - \sum_{i \in V:\, a_i = 1} p_i. \tag{2}$$
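With a routine for $I_i(a)$ in hand, the payoffs $u_i$ and $U$ in (2) are straightforward to evaluate. The sketch below repeats a minimal leaked-information helper so that it runs on its own, assuming Gaussian types and unit-variance noise as in Section 2.1. The function names are hypothetical. Adding the platform's and the users' payoffs also shows that the prices cancel, which anticipates the social-surplus expression of Section 3.1.

```python
def solve(m, b):
    """Solve m x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    aug = [list(row) + [b[r]] for r, row in enumerate(m)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[piv] = aug[piv], aug[col]
        for r in range(col + 1, n):
            f = aug[r][col] / aug[col][col]
            for k in range(col, n + 1):
                aug[r][k] -= f * aug[col][k]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (aug[r][n] - sum(aug[r][k] * x[k] for k in range(r + 1, n))) / aug[r][r]
    return x


def leaked_information(sigma, a, i):
    """I_i(a) for Gaussian types with signals S_j = X_j + Z_j, Z_j ~ N(0, 1)."""
    shared = [j for j, aj in enumerate(a) if aj == 1]
    if not shared:
        return 0.0
    v_mat = [[sigma[j][k] + (1.0 if j == k else 0.0) for k in shared] for j in shared]
    c = [sigma[i][j] for j in shared]
    return sum(cj * wj for cj, wj in zip(c, solve(v_mat, c)))


def user_payoff(sigma, v, a, p, i):
    """u_i: p_i - v_i I_i(a) if user i shares, -v_i I_i(a) otherwise."""
    loss = v[i] * leaked_information(sigma, a, i)
    return p[i] - loss if a[i] == 1 else -loss


def platform_payoff(sigma, a, p):
    """U(a, p) in (2): total leaked information minus payments to sharing users."""
    n = len(a)
    info = sum(leaked_information(sigma, a, i) for i in range(n))
    return info - sum(p[i] for i in range(n) if a[i] == 1)


rho, v, p, a = 0.5, [1.0, 1.0], [0.3, 0.3], (1, 1)
sigma = [[1.0, rho], [rho, 1.0]]
users = [user_payoff(sigma, v, a, p, i) for i in range(2)]
total = platform_payoff(sigma, a, p) + sum(users)
surplus = sum((1 - v[i]) * leaked_information(sigma, a, i) for i in range(2))
print(users, total, surplus)  # total equals surplus: payments are pure transfers
```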

2.3 Equilibrium Concept

An action profile $a = (a_1, \ldots, a_n)$ and a price vector $p = (p_1, \ldots, p_n)$ constitute a pure strategy equilibrium if both users and the platform maximize their payoffs given other players’ strategies. More formally, in the next definition we define an equilibrium as a Stackelberg equilibrium in which the platform chooses the price vector recognizing the user equilibrium that will result following this choice.

Definition 1. Given the price vector $p = (p_1, \ldots, p_n)$, an action profile $a = (a_1, \ldots, a_n)$ is a user equilibrium if for all $i \in V$,

$$a_i \in \arg\max_{a \in \{0,1\}} u_i(a_i = a, a_{-i}, p).$$

We denote the set of user equilibria at a given price vector $p$ by $A(p)$. A pair $(p^E, a^E)$ of price and action vectors is a pure strategy Stackelberg equilibrium if $a^E \in A(p^E)$ and there is no profitable deviation for the platform, i.e.,

$$U(a^E, p^E) \ge U(a, p), \quad \text{for all } p \text{ and for all } a \in A(p).$$
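For small $n$, the set $A(p)$ can be listed by brute force: a profile is a user equilibrium when no user gains by flipping only her own action. The sketch below does this under the Gaussian, unit-noise assumptions of Section 2.1. The names `user_equilibria` and `leaked_information` are hypothetical, and the prices $p = (0.3, 0.3)$ with $\rho = 0.9$ and $v_i = 1$ are illustrative values of our own choosing. In that case both "nobody shares" and "everybody shares" survive, a multiplicity of the kind discussed below.

```python
from itertools import product


def solve(m, b):
    """Solve m x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    aug = [list(row) + [b[r]] for r, row in enumerate(m)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[piv] = aug[piv], aug[col]
        for r in range(col + 1, n):
            f = aug[r][col] / aug[col][col]
            for k in range(col, n + 1):
                aug[r][k] -= f * aug[col][k]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (aug[r][n] - sum(aug[r][k] * x[k] for k in range(r + 1, n))) / aug[r][r]
    return x


def leaked_information(sigma, a, i):
    """I_i(a) for Gaussian types with unit-variance signal noise."""
    shared = [j for j, aj in enumerate(a) if aj == 1]
    if not shared:
        return 0.0
    v_mat = [[sigma[j][k] + (1.0 if j == k else 0.0) for k in shared] for j in shared]
    c = [sigma[i][j] for j in shared]
    return sum(cj * wj for cj, wj in zip(c, solve(v_mat, c)))


def user_equilibria(sigma, v, p, tol=1e-12):
    """All a in {0,1}^n at which no user strictly gains by flipping her own a_i."""
    n = len(p)

    def payoff(a, i):
        loss = v[i] * leaked_information(sigma, a, i)
        return p[i] - loss if a[i] == 1 else -loss

    result = []
    for a in product((0, 1), repeat=n):
        flips = (a[:i] + (1 - a[i],) + a[i + 1:] for i in range(n))
        if all(payoff(b, i) <= payoff(a, i) + tol for i, b in enumerate(flips)):
            result.append(a)
    return result


rho = 0.9
sigma = [[1.0, rho], [rho, 1.0]]
eqs = user_equilibria(sigma, [1.0, 1.0], [0.3, 0.3])
print(eqs)  # [(0, 0), (1, 1)]
```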

In what follows, we refer to a pure strategy Stackelberg equilibrium simply as an equilibrium.

3 Analysis

In this section, we first study the first-best information sharing decisions that maximize the sum of users’ and platform payoffs and then proceed to characterize the equilibrium and its efficiency properties.

3.1 First Best

We define the first best as the data sharing decisions that maximize utilitarian social welfare or social surplus given by the sum of the payoffs of the platform and users. Social surplus from an action profile $a$ is

$$\text{Social surplus}(a) = U(a, p) + \sum_{i \in V} u_i(a, p) = \sum_{i \in V} (1 - v_i) I_i(a).$$

Prices do not appear in this expression because they are transfers from the platform to users.⁶ The first-best action profile, $a^W$, maximizes this expression. The next proposition characterizes the first-best action profile.

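Proposition 1. The first best involves $a^W_i = 1$ if

$$\sum_{j \in V} (1 - v_j) \frac{\operatorname{Cov}\big(X_i, X_j \mid a_i = 0, a^W_{-i}\big)^2}{1 + \sigma_i^2 - I_i\big(a_i = 0, a^W_{-i}\big)} \ge 0, \tag{3}$$

and $a^W_i = 0$ if the left-hand side of (3) is negative. Here $\operatorname{Cov}(\cdot \mid a_i = 0, a^W_{-i})$ denotes the covariance conditional on the data shared under the profile $(a_i = 0, a^W_{-i})$.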

The proof of this proposition as well as all other proofs, unless otherwise stated, are presented in Appendix A.

⁶ In including the platform’s payoff in social surplus we are assuming that this payoff is not coming from shifting revenues from some other (perhaps off-line) businesses. If we do not include the payoff of the platform in our welfare measure, our inefficiency results would hold a fortiori.

To understand this result, consider first the case in which there are no data externalities, so that the covariance terms in (3) are zero except $\operatorname{Cov}(X_i, X_i \mid a_i = 0, a^W_{-i}) = \sigma_i^2$. The left-hand side is then simply $\sigma_i^4/(1 + \sigma_i^2)$ times $1 - v_i$. This yields $a^W_i = 1$ if $v_i \le 1$. The situation is different in the presence of data externalities, because now the covariance terms are non-zero. In this case, an individual should optimally share her data only if it does not reveal too much about users with $v_j > 1$.
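When $n$ is small, the first best can also be found by exhaustive search over all $2^n$ action profiles, which sidesteps the fixed-point form of (3). The sketch below does this for two users with illustrative values $v = (0.5, 3)$ of our own choosing, under the Gaussian, unit-noise assumptions of Section 2.1; the function names are hypothetical. Without correlation, user 1 should share. With $\rho = 0.9$, however, her data reveal too much about user 2, whose $v_2 > 1$, and the first best has nobody sharing.

```python
from itertools import product


def solve(m, b):
    """Solve m x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    aug = [list(row) + [b[r]] for r, row in enumerate(m)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[piv] = aug[piv], aug[col]
        for r in range(col + 1, n):
            f = aug[r][col] / aug[col][col]
            for k in range(col, n + 1):
                aug[r][k] -= f * aug[col][k]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (aug[r][n] - sum(aug[r][k] * x[k] for k in range(r + 1, n))) / aug[r][r]
    return x


def leaked_information(sigma, a, i):
    """I_i(a) for Gaussian types with unit-variance signal noise."""
    shared = [j for j, aj in enumerate(a) if aj == 1]
    if not shared:
        return 0.0
    v_mat = [[sigma[j][k] + (1.0 if j == k else 0.0) for k in shared] for j in shared]
    c = [sigma[i][j] for j in shared]
    return sum(cj * wj for cj, wj in zip(c, solve(v_mat, c)))


def first_best(sigma, v):
    """Brute-force a^W: maximize sum_i (1 - v_i) I_i(a) over all 2^n profiles."""
    n = len(v)

    def surplus(a):
        return sum((1 - v[i]) * leaked_information(sigma, a, i) for i in range(n))

    return max(product((0, 1), repeat=n), key=surplus)


v = [0.5, 3.0]  # illustrative: user 1 cares little about privacy, user 2 a lot
for rho in (0.0, 0.9):
    sigma = [[1.0, rho], [rho, 1.0]]
    print(rho, first_best(sigma, v))  # 0.0 (1, 0), then 0.9 (0, 0)
```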

3.2 Equilibrium Preliminaries

The next lemma characterizes two important properties of the leaked information function $I_i : \{0, 1\}^n \to \mathbb{R}$.

Lemma 1.

1. Monotonicity: for two action profiles $a$ and $a'$ with $a \ge a'$,

$$I_i(a) \ge I_i(a'), \quad \forall i \in \{1, \ldots, n\}.$$

2. Submodularity: for two action profiles $a$ and $a'$ with $a'_{-i} \ge a_{-i}$,

$$I_i(a_i = 1, a_{-i}) - I_i(a_i = 0, a_{-i}) \ge I_i(a_i = 1, a'_{-i}) - I_i(a_i = 0, a'_{-i}).$$

The monotonicity property states that as the set of users who share their information expands, the leaked information about each user (weakly) increases. This is an intuitive consequence of the fact that more information always facilitates the estimation problem of the platform and reduces the mean square error of its estimates. More important for the rest of our analysis is the submodularity property, which implies that the marginal increase in the leaked information from individual $i$’s sharing decision is decreasing in the information shared by others. This too is intuitive and follows from the fact that when others’ actions reveal more information, there is less to be revealed by the sharing decision of any given individual.⁷
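Both properties can be checked numerically. Under the Gaussian structure of Section 2.1, submodularity also has a one-line explanation. Let $\tau^2 = \sigma_i^2 - I_i(a_i = 0, a_{-i})$ be the platform's residual uncertainty about user $i$ before she shares. Observing $S_i = X_i + Z_i$ then lowers the mean square error by $\tau^4/(1 + \tau^2)$. This amount rises with $\tau^2$, and so it falls as others share more. The sketch below checks monotonicity, this own-marginal formula, and the submodularity inequality of Lemma 1 over all pairs of profiles. The helper names are our own, and the covariance matrix is an arbitrary positive-definite example.

```python
from itertools import product


def solve(m, b):
    """Solve m x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    aug = [list(row) + [b[r]] for r, row in enumerate(m)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[piv] = aug[piv], aug[col]
        for r in range(col + 1, n):
            f = aug[r][col] / aug[col][col]
            for k in range(col, n + 1):
                aug[r][k] -= f * aug[col][k]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (aug[r][n] - sum(aug[r][k] * x[k] for k in range(r + 1, n))) / aug[r][r]
    return x


def leaked_information(sigma, a, i):
    """I_i(a) for Gaussian types with unit-variance signal noise."""
    shared = [j for j, aj in enumerate(a) if aj == 1]
    if not shared:
        return 0.0
    v_mat = [[sigma[j][k] + (1.0 if j == k else 0.0) for k in shared] for j in shared]
    c = [sigma[i][j] for j in shared]
    return sum(cj * wj for cj, wj in zip(c, solve(v_mat, c)))


def with_action(a, i, value):
    """Copy of the profile tuple a with user i's action replaced by value."""
    return a[:i] + (value,) + a[i + 1:]


def own_gain(sigma, a, i):
    """Marginal leak from i's own sharing: I_i(a_i = 1, a_-i) - I_i(a_i = 0, a_-i)."""
    return (leaked_information(sigma, with_action(a, i, 1), i)
            - leaked_information(sigma, with_action(a, i, 0), i))


def tau_formula_holds(sigma, a, i, tol=1e-12):
    """Check own_gain = tau^4 / (1 + tau^2) with tau^2 = sigma_i^2 - I_i(a_i = 0, a_-i)."""
    tau2 = sigma[i][i] - leaked_information(sigma, with_action(a, i, 0), i)
    return abs(own_gain(sigma, a, i) - tau2 ** 2 / (1 + tau2)) < tol


def check_lemma1(sigma, tol=1e-12):
    """Test monotonicity and own-action submodularity over all pairs a >= b."""
    n = len(sigma)
    profiles = list(product((0, 1), repeat=n))
    for a, b in product(profiles, repeat=2):
        if not all(x >= y for x, y in zip(a, b)):
            continue  # keep only pairs where a shares weakly more than b
        for i in range(n):
            if leaked_information(sigma, a, i) < leaked_information(sigma, b, i) - tol:
                return False  # monotonicity fails
            if own_gain(sigma, a, i) > own_gain(sigma, b, i) + tol:
                return False  # submodularity fails (a_-i >= b_-i)
    return True


sigma = [[1.0, 0.6, 0.2], [0.6, 1.5, 0.4], [0.2, 0.4, 0.8]]
print(check_lemma1(sigma))  # True
print(all(tau_formula_holds(sigma, a, i)
          for a in product((0, 1), repeat=3) for i in range(3)))  # True
```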

Using Lemma 1, we next show that for any price vector $p \in \mathbb{R}^n$, the set $A(p)$ is a (non-empty) complete lattice.

Lemma 2. For any p, the set A(p) is a complete lattice, and thus has a least and a greatest element.

Lemma 2 implies that the set of user equilibria is always non-empty, but it may not be a singleton, as we illustrate in the next example.

⁷ Lemma 1 is established under the assumption of Gaussian signals and with mean square error as the measure of leaked information the players care about. However, this lemma and our equilibrium existence and inefficiency results that build on it hold for any structure of signals and any measure of leaked information that ensure the monotonicity and submodularity properties of Lemma 1. One important example that satisfies these properties is a leaked information measure given by the mutual information between a user’s type and the vector of types of users who have shared their data: $I_i(a) = I\big(X_i; (X_j : a_j = 1)\big)$, where the mutual information between two random variables $X$ and $Y$ is defined as $I(X; Y) = \mathbb{E}_{X,Y}\Big[-\log \frac{P(X)P(Y)}{P(X,Y)}\Big]$. This measure of leaked information satisfies monotonicity and submodularity for any distribution of random variables $X$ (see Appendix B for more details).
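For jointly normal types, this alternative measure has a familiar closed form. It is a standard property of Gaussian differential entropy, stated here only as an aid to the reader, and it ties the measure back to the conditional variance that drives the mean-square-error measure. Let $A = \{j : a_j = 1\}$ and suppose $\operatorname{Var}(X_i \mid X_A) > 0$. (The variance is zero, and the mutual information infinite, when $i$ itself is in $A$.) Then:

```latex
I(X_i; X_A) = h(X_i) - h(X_i \mid X_A)
            = \frac{1}{2}\log\frac{\sigma_i^2}{\operatorname{Var}(X_i \mid X_A)},
\qquad A = \{j : a_j = 1\}.
```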

8

1

2

1

2

1

2

1

2

(2−ρ

2

)

2

2(4−ρ

2

)

(2−ρ

2

)

2

2(4−ρ

2

)

(2−ρ

2

)

2

2(4−ρ

2

)

(2−ρ

2

)

2

2(4−ρ

2

)

a

1

=1a

1

=1

a

1

=1a

1

=1

a

2

=0a

2

=0

a

1

=0a

1

=0

a

2

=1a

2

=1

p

2

p

2

p

1

p

1

a

2

=0a

2

=0

a

1

=0a

1

=0

a

2

=1a

2

=1

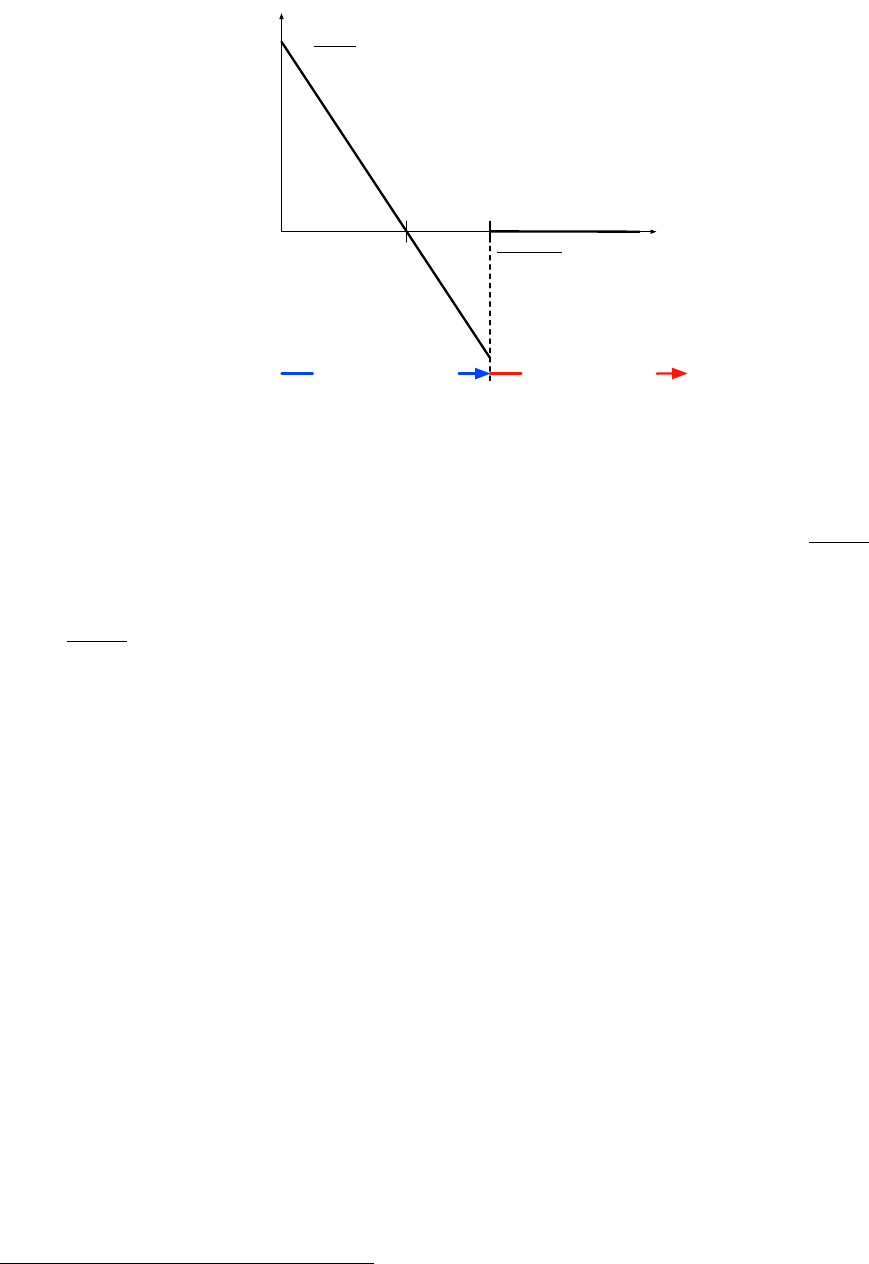

Figure 1: The user equilibrium as a function of price vector (p

1

, p

2

) in the setting of Example 1. For

the prices in the purple area in the center, both a

1

= a

2

= 0 and a

1

= a

2

= 1 are user equilibria.

Example 1. Suppose there are two users 1 and 2 with covariance matrix

$$\Sigma = \begin{pmatrix} 1 & \rho \\ \rho & 1 \end{pmatrix}$$

and $v_1 = v_2 = v$. The set of user equilibria in this case is depicted in Figure 1. When $p_1, p_2 \in \Big[\frac{(2-\rho^2)^2}{2(4-\rho^2)}, \frac{1}{2}\Big]$, both action profiles $a_1 = a_2 = 0$ and $a_1 = a_2 = 1$ are user equilibria. This is a consequence of the submodularity of the leaked information function (Lemma 1): when user 1 shares her data, she is also revealing a lot about user 2, making it less costly for user 2 to share her data. Conversely, when user 1 does not share, this encourages user 2 not to share. Despite this multiplicity of user equilibria, there exists a unique (Stackelberg) equilibrium for this game given by $a^E_1 = a^E_2 = 1$ and $p^E_1 = p^E_2 = \frac{(2-\rho^2)^2}{2(4-\rho^2)}$. This uniqueness follows because the platform can choose the price vector to encourage both users to share.
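The endpoints of this band follow from the leaked-information values implied by the formulas of Section 3.4. A user stays in $(1,1)$ if her price covers $v[I_i(1,1) - I_i(0,1)]$, and she stays out of $(0,0)$ if her price does not exceed $v\,I_i(1,0)$. The sketch below computes both endpoints; the function names are hypothetical. The endpoints scale with $v$, and at $v = 1$ they coincide with the interval and price stated in the example. The sketch also compares the platform's profit from the three kinds of profiles, assuming it pays each sharer the least price that sustains her sharing, in line with the definition of $p^a$ in Section 3.5.

```python
def example1_band(rho, v=1.0):
    """Price band in which both (0, 0) and (1, 1) are user equilibria.

    Unit variances and unit signal noise, as in Example 1; endpoints scale with v.
    """
    i_own = 0.5                     # I_i when only user i shares
    i_other = rho ** 2 / 2          # I_i when only the other user shares
    i_both = 2 / (4 - rho ** 2)     # I_i when both share
    lower = v * (i_both - i_other)  # least price keeping user i in (1, 1)
    upper = v * i_own               # user i stays out of (0, 0) at prices up to this
    return lower, upper


def platform_profits(rho, v=1.0):
    """Platform profit per profile type when each sharer gets her least sustaining price."""
    i_own, i_other, i_both = 0.5, rho ** 2 / 2, 2 / (4 - rho ** 2)
    lower, _ = example1_band(rho, v)
    return {
        "both": 2 * i_both - 2 * lower,
        "one": i_own + i_other - v * i_own,
        "none": 0.0,
    }


rho = 0.8
lower, upper = example1_band(rho)
print(lower, (2 - rho ** 2) ** 2 / (2 * (4 - rho ** 2)), upper)
profits = platform_profits(rho)
print(profits, max(profits, key=profits.get))  # "both" wins; at v = 1 it earns rho^2
```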

3.3 Existence of Equilibrium

The next theorem establishes the existence of a (pure strategy) equilibrium.

Theorem 1. An equilibrium always exists. That is, there exist an action profile $a^E$ and a price vector $p^E$ such that $a^E \in A(p^E)$, and

$$U(a^E, p^E) \ge U(a, p), \quad \text{for all } p \text{ and for all } a \in A(p). \tag{4}$$

Note that the equilibrium may not be unique, but if there are multiple equilibria, all of them yield the same payoff for the platform (since otherwise (4) would not be satisfied for the equilibrium with lower payoff for the platform). The following example clarifies this point.

Example 2. Suppose there are three users with the same value of privacy and variance: $v_i = 1.18$ and $\sigma_i^2 = 1$ for $i = 1, 2, 3$. We set all off-diagonal entries of $\Sigma$ equal to 0.3. Any action profile in which two out of three users share their information is an equilibrium, and thus there are three distinct equilibria. But it is straightforward to verify that they all yield the same payoff to the platform.
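Example 2 can be checked by exhaustive search. The sketch rests on an assumption: the platform pays each sharer the least price that makes sharing a best response, $v_i[I_i(1, a_{-i}) - I_i(0, a_{-i})]$, and pays non-sharers nothing. By the monotonicity in Lemma 1, non-sharers then prefer not to share. For every profile the code computes the resulting platform profit and reports the maximizers, which are the candidate equilibrium profiles. The helper names are our own.

```python
from itertools import product


def solve(m, b):
    """Solve m x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    aug = [list(row) + [b[r]] for r, row in enumerate(m)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[piv] = aug[piv], aug[col]
        for r in range(col + 1, n):
            f = aug[r][col] / aug[col][col]
            for k in range(col, n + 1):
                aug[r][k] -= f * aug[col][k]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (aug[r][n] - sum(aug[r][k] * x[k] for k in range(r + 1, n))) / aug[r][r]
    return x


def leaked_information(sigma, a, i):
    """I_i(a) for Gaussian types with unit-variance signal noise."""
    shared = [j for j, aj in enumerate(a) if aj == 1]
    if not shared:
        return 0.0
    v_mat = [[sigma[j][k] + (1.0 if j == k else 0.0) for k in shared] for j in shared]
    c = [sigma[i][j] for j in shared]
    return sum(cj * wj for cj, wj in zip(c, solve(v_mat, c)))


def min_price_profit(sigma, v, a):
    """Total leaked information minus the least payments sustaining profile a."""
    n = len(a)
    info = sum(leaked_information(sigma, a, i) for i in range(n))
    pay = 0.0
    for i in range(n):
        if a[i] == 1:
            off = a[:i] + (0,) + a[i + 1:]
            pay += v[i] * (leaked_information(sigma, a, i) - leaked_information(sigma, off, i))
    return info - pay


sigma = [[1.0 if j == k else 0.3 for k in range(3)] for j in range(3)]
v = [1.18] * 3
profits = {a: min_price_profit(sigma, v, a) for a in product((0, 1), repeat=3)}
best = max(profits.values())
winners = sorted(a for a, u in profits.items() if u > best - 1e-9)
print(winners)  # [(0, 1, 1), (1, 0, 1), (1, 1, 0)]
```

In this computation the maximal profit is small but positive, while the three-sharer profile yields a slightly negative profit. This is consistent with $v_i = 1.18$ lying close to the boundary where data purchases stop paying.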

3.4 An Illustrative Example

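In this subsection, we provide an illustrative example that shows how some of the key objects in our analysis are computed and highlights a few of the subtle aspects of the equilibrium. Consider the same setting as in Example 1 with two users with the same value of privacy, $v$, and a correlation coefficient $\rho$ between their information. Given the action profile of users, the joint distribution of $(X_1, S_1, S_2)$ in this example is

$$\begin{pmatrix} X_1 \\ S_1 \\ S_2 \end{pmatrix} \sim \mathcal{N}\left( \begin{pmatrix} 0 \\ 0 \\ 0 \end{pmatrix}, \begin{pmatrix} 1 & 1 & \rho \\ 1 & 1 + \gamma_1^2 & \rho \\ \rho & \rho & 1 + \gamma_2^2 \end{pmatrix} \right),$$

where

$$\gamma_i^2 = \begin{cases} 1, & a_i = 1, \\ \infty, & a_i = 0. \end{cases}$$

That is, a user who does not share is modeled as sending an infinitely noisy, and hence uninformative, signal.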

Suppose the platform has received the signals $(S_1, S_2)$. Then its optimal estimator for $X_1$, $\hat{x}_1(S_1, S_2)$, is simply the conditional expectation of $X_1$ given $S_1$ and $S_2$, and its mean square error is equal to the conditional variance of $X_1$ given these signals, i.e.,

$$\min_{\hat{x}_1} \mathbb{E}\big[(\hat{x}_1(S_1, S_2) - X_1)^2\big] = \frac{\gamma_1^2 (1 + \gamma_2^2 - \rho^2)}{(1 + \gamma_1^2)(1 + \gamma_2^2) - \rho^2}.$$

Similarly, the mean square error of the platform’s best estimator for $X_2$ is

$$\min_{\hat{x}_2} \mathbb{E}\big[(\hat{x}_2(S_1, S_2) - X_2)^2\big] = \frac{\gamma_2^2 (1 + \gamma_1^2 - \rho^2)}{(1 + \gamma_1^2)(1 + \gamma_2^2) - \rho^2}.$$

When some $\gamma_i^2 = \infty$, these expressions are understood as limits.
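The formulas can be checked numerically, including the limits required by the convention $\gamma_i^2 = \infty$ for non-sharers. In the Python sketch below, `mse_x1` and `mse_x1_direct` are hypothetical names. The first transcribes the closed form, with `math.inf` standing for a non-sharer. The second computes $1 - c^\top V^{-1} c$ directly from the covariance matrix above, for finite noise variances.

```python
import math


def mse_x1(g1, g2, rho):
    """Closed-form MSE of the best estimate of X_1; g1, g2 are gamma_1^2, gamma_2^2."""
    if math.isinf(g1) and math.isinf(g2):
        return 1.0                       # no data: prior variance of X_1
    if math.isinf(g1):
        return 1 - rho ** 2 / (1 + g2)   # limit as gamma_1^2 -> infinity
    if math.isinf(g2):
        return g1 / (1 + g1)             # limit as gamma_2^2 -> infinity
    return g1 * (1 + g2 - rho ** 2) / ((1 + g1) * (1 + g2) - rho ** 2)


def mse_x1_direct(g1, g2, rho):
    """Var(X_1 | S_1, S_2) = 1 - c' V^{-1} c with finite noise variances (2x2 inverse)."""
    c1, c2 = 1.0, rho                    # Cov(X_1, S_1), Cov(X_1, S_2)
    v11, v12, v22 = 1 + g1, rho, 1 + g2  # V = Var(S_1, S_2)
    det = v11 * v22 - v12 ** 2
    quad = (v22 * c1 ** 2 - 2 * v12 * c1 * c2 + v11 * c2 ** 2) / det
    return 1 - quad


rho = 0.6
print(mse_x1(1, 1, rho), mse_x1_direct(1, 1, rho))  # both share: (2 - rho^2)/(4 - rho^2)
print(1 - mse_x1(1, math.inf, rho))                 # leaked info, own signal only: 1/2
print(1 - mse_x1(math.inf, 1, rho))                 # leaked info, other's signal only: rho^2/2
```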

We first show that the total payment from the platform to users is non-monotone in the number of users sharing their information. When the platform induces both users to share ($a_1 = a_2 = 1$), it makes a total payment of $v\frac{(2-\rho^2)^2}{4-\rho^2}$. In contrast, when it only induces the first user to share ($a_1 = 1, a_2 = 0$), this will cost $\frac{v}{2}$. Therefore, when $\rho^2 \ge \frac{7-\sqrt{17}}{4} \approx 0.72$, the platform pays less to have both users share their data. Intuitively, this cost saving for the platform is a consequence of the submodularity of leaked information (Lemma 1): when both users share, the data of each are less valuable in view of the information revealed by the other user. This finding reflects one of the claims made in the Introduction: market prices for data do not reflect the value that users attach to their privacy and may be depressed because of data externalities.
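The threshold solves $2(2-\rho^2)^2 = 4-\rho^2$, i.e., $2x^2 - 7x + 4 = 0$ in $x = \rho^2$. The root of this quadratic in $[0, 1]$ is $(7-\sqrt{17})/4 \approx 0.7192$. The short Python sketch below evaluates both payments on either side of the threshold; the function names are hypothetical and $v = 1$ is set for concreteness.

```python
import math


def total_payment_both(v, rho):
    """Least total payment inducing a_1 = a_2 = 1: v (2 - rho^2)^2 / (4 - rho^2)."""
    return v * (2 - rho ** 2) ** 2 / (4 - rho ** 2)


def total_payment_one(v):
    """Least payment inducing a_1 = 1, a_2 = 0: v / 2."""
    return v / 2


# Sharing by both is cheaper iff 2 x^2 - 7 x + 4 <= 0 with x = rho^2.
threshold = (7 - math.sqrt(17)) / 4
print(round(threshold, 4))  # 0.7192
for rho2 in (0.5, threshold, 0.9):
    rho = math.sqrt(rho2)
    print(rho2, total_payment_both(1.0, rho), total_payment_one(1.0))
```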

We next illustrate that equilibrium (social) surplus is non-monotonic in the users' value of privacy. Equilibrium surplus is depicted in Figure 2. For values of $v$ larger than $\frac{4}{(2 - \rho^2)^2}$, users do not share their data and equilibrium surplus is zero. When $v$ is smaller than 1, users share their data and equilibrium surplus is positive. For intermediate values of $v$, in particular for $v \in \left(1, \frac{4}{(2 - \rho^2)^2}\right]$, the platform chooses a price vector that induces both users to share their data, but in this case, the social surplus is negative. The intuition is related to the point already emphasized in the previous paragraph: when both users share their data, the externalities depress the market prices for data and this makes it profitable for the platform to acquire the users' data even though $v > 1$. More explicitly, when user 2 shares her data, this reveals so much information about user 1 that she becomes willing to accept a relatively low price for sharing her data, and this maintains an equilibrium with low prices for data even though both users attach a relatively high value to their privacy.

Figure 2: Equilibrium and social surplus as a function of the value of privacy $v$ for a setting with two users with $\sigma_1^2 = \sigma_2^2 = 1$, $\Sigma_{12} = \rho$, and $v_1 = v_2 = v$. [Surplus is plotted against $v$; regions are labeled $a^E_1 = a^E_2 = 1$ and $a^E_1 = a^E_2 = 0$, with marked values $\frac{4}{4 - \rho^2}$ and $\frac{4}{(2 - \rho^2)^2}$.]
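To see the mechanism numerically, the sketch below enumerates the four action profiles of the two-user example, prices each sharing user at $v$ times her marginal leakage (the price that leaves her indifferent, characterized in Theorem 2 below), and lets the platform pick the most profitable profile. It assumes unit noise variance, treats leaked information as the reduction in mean square error, and ignores tie-breaking; $\rho = 0.9$ and $v = 2$ are arbitrary illustrative values inside the interval $(1, 4/(2 - \rho^2)^2]$, and the helper names are hypothetical.

```python
import itertools

import numpy as np


def leaked(sigma, sharers, i):
    """Leaked information about user i when users in `sharers` share (unit noise variance)."""
    s = list(sharers)
    if not s:
        return 0.0
    cov_i = sigma[i, s]
    return float(cov_i @ np.linalg.solve(sigma[np.ix_(s, s)] + np.eye(len(s)), cov_i))


def outcome(sigma, v, a):
    """Platform profit and social surplus when the platform induces profile a."""
    n = len(a)
    sharers = [j for j in range(n) if a[j] == 1]
    info = [leaked(sigma, sharers, i) for i in range(n)]
    payments = 0.0
    for i in sharers:
        others = [j for j in sharers if j != i]
        # lowest acceptable price: v_i times the extra leakage caused by her own data
        payments += v[i] * (info[i] - leaked(sigma, others, i))
    profit = sum(info) - payments
    surplus = sum((1 - v[i]) * info[i] for i in range(n))
    return profit, surplus


rho, v_common = 0.9, 2.0
sigma = np.array([[1.0, rho], [rho, 1.0]])
v = [v_common, v_common]
results = {a: outcome(sigma, v, a) for a in itertools.product((0, 1), repeat=2)}
best = max(results, key=lambda a: results[a][0])
print("profit-maximizing profile:", best, "profit, surplus:", results[best])
```

At these values the platform earns a positive profit by inducing both users to share, while social surplus, $(1 - v)\frac{4}{4 - \rho^2}$, is negative.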

3.5 Equilibrium Prices

In this subsection, we characterize the equilibrium price vector. For any action profile $a \in \{0, 1\}^n$, let $p^a$ denote the least (element-wise minimum) equilibrium price vector that sustains an action profile $a$ in a user equilibrium. More specifically, $p^a$ is defined such that:⁸

$$p^a \leq p, \quad \text{for all } p \text{ such that } a \in A(p).$$

Profit maximization by the platform implies that equilibrium prices must satisfy this property, since otherwise the platform could reduce prices and still implement the same action profile. We therefore refer to $p^a$ as "equilibrium price vector" or simply as "equilibrium prices" (with the understanding that these would be the equilibrium prices when the platform chooses to induce action profile $a$).

⁸ Prices for users not sharing their data are not well-defined.

The next theorem computes this price vector (and shows that it exists).

Theorem 2. For any action profile $a \in \{0, 1\}^n$, we have

$$I_i(a_i = 1, a_{-i}) = I_i(a_i = 0, a_{-i}) + \frac{\left(\sigma_i^2 - I_i(a_i = 0, a_{-i})\right)^2}{(\sigma_i^2 + 1) - I_i(a_i = 0, a_{-i})}, \qquad (5)$$

and

$$I_i(a_i = 0, a_{-i}) = d_i^T (I + D_i)^{-1} d_i, \quad \text{for all } a_i = 1,$$

where $D_i$ is the matrix obtained by removing row and column $i$ from matrix $\Sigma$ as well as all rows and columns $j$ for which $a_j = 0$, and $d_i$ is $(\Sigma_{ij} : j \neq i \text{ s.t. } a_j = 1)$. The equilibrium price that sustains action profile $a$ is

$$p^a_i = \begin{cases} v_i \dfrac{\left(\sigma_i^2 - I_i(a_i = 0, a_{-i})\right)^2}{(\sigma_i^2 + 1) - I_i(a_i = 0, a_{-i})}, & a_i = 1, \\[1ex] 0, & a_i = 0. \end{cases}$$

The first part of Theorem 2 provides a decomposition of leaked information about user $i$ in terms of leaked information about her when she does not share her data. In particular, the first term on the right-hand side of equation (5), $I_i(a_i = 0, a_{-i})$, is her leaked information resulting from the data sharing of other users and thus represents the data externality. The second term is the additional leakage when user $i$ shares her data. The second part of Theorem 2 states that the equilibrium price offered to any user $i$ who shares her information must make her indifferent between sharing and not sharing. This is because the platform sets prices and, by the definition of $p^a$, offers the lowest price at which the user is still willing to share. This is also why the equilibrium price, $p^a_i$, is equal to the value of privacy, $v_i$, multiplied by the second term in (5), which is the additional leakage of information and hence the loss of privacy resulting from the user's own data sharing.
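The two parts of Theorem 2 translate directly into a few lines of linear algebra. The sketch below is a set of hypothetical helpers (not code from the paper) that computes $I_i(a_i = 0, a_{-i}) = d_i^T (I + D_i)^{-1} d_i$, applies decomposition (5), and returns $p^a$; the check uses the two-user example, where $I_1(1,1) = 2/(4 - \rho^2)$ and the total payment is $v(2 - \rho^2)^2/(4 - \rho^2)$.

```python
import numpy as np


def leaked_without_i(sigma, a, i):
    """I_i(a_i = 0, a_{-i}) = d_i^T (I + D_i)^{-1} d_i from Theorem 2."""
    keep = [j for j in range(len(a)) if j != i and a[j] == 1]
    if not keep:
        return 0.0
    d = sigma[i, keep]                     # d_i: covariances of i with the other sharers
    D = sigma[np.ix_(keep, keep)]          # D_i: covariance block of the other sharers
    return float(d @ np.linalg.solve(np.eye(len(keep)) + D, d))


def leaked_with_i(sigma, a, i):
    """I_i(a_i = 1, a_{-i}) via decomposition (5)."""
    base = leaked_without_i(sigma, a, i)
    s2 = sigma[i, i]
    return base + (s2 - base) ** 2 / (s2 + 1 - base)


def equilibrium_prices(sigma, v, a):
    """p^a: v_i times the extra leakage caused by i's own data; 0 for non-sharers."""
    prices = np.zeros(len(a))
    for i, ai in enumerate(a):
        if ai == 1:
            prices[i] = v[i] * (leaked_with_i(sigma, a, i) - leaked_without_i(sigma, a, i))
    return prices


rho, v = 0.7, [1.3, 1.3]
sigma = np.array([[1.0, rho], [rho, 1.0]])
print(equilibrium_prices(sigma, v, [1, 1]))
```

Computing $I_i(a_i = 1, a_{-i})$ through (5) rather than by conditioning on all signals at once keeps the externality term $I_i(a_i = 0, a_{-i})$ visible, which is the quantity the price depends on.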

The following is an immediate corollary of Theorem 2.

Corollary 1. For any user $i$, the equilibrium price $p^{(a_i = 1, a_{-i})}_i$ (that induces $a_i = 1$ for any action profile $a_{-i} \in \{0, 1\}^{n-1}$) is increasing in $\sigma_i^2$ and decreasing in the data externality captured by $I_i(a_i = 0, a_{-i})$. Moreover, leaked information $I_i(a_i = 1, a_{-i})$ is increasing in $\sigma_i^2$ and in the data externality $I_i(a_i = 0, a_{-i})$.

The first part of Corollary 1 shows that a higher variance of the user's type, $\sigma_i^2$, increases the equilibrium price. Intuitively, a higher variance makes the user's type more difficult to predict and thus her own information more valuable. This also explains why the price is decreasing in the data externality, represented by the information leaked by others, $I_i(a_i = 0, a_{-i})$. The last part of Corollary 1 shows that a higher variance of individual type, as well as a greater data externality, increases the overall leakage of information about the user.
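Because the Theorem 2 price and decomposition (5) depend on user $i$ only through $\sigma_i^2$ and the externality $I_i(a_i = 0, a_{-i})$, the monotonicity claims in Corollary 1 can be checked on a grid. The sketch below uses arbitrary illustrative values and restricts the externality to $[0, \sigma_i^2)$, since leaked information cannot exceed the prior variance; it illustrates rather than proves the corollary, and the function names are hypothetical.

```python
import numpy as np


def price_from(sigma2, externality, v=1.0):
    """Theorem 2 price v (sigma^2 - I0)^2 / (sigma^2 + 1 - I0), with I0 = I_i(a_i=0, a_-i)."""
    return v * (sigma2 - externality) ** 2 / (sigma2 + 1 - externality)


def leaked_from(sigma2, externality):
    """Decomposition (5): I_i(a_i=1, a_-i) as a function of sigma^2 and I0."""
    return externality + (sigma2 - externality) ** 2 / (sigma2 + 1 - externality)


# Vary the externality I0 over [0, sigma^2) with sigma^2 fixed ...
sigma2 = 1.5
ext_grid = np.linspace(0.0, 1.4, 50)
prices_vs_ext = price_from(sigma2, ext_grid)
leaks_vs_ext = leaked_from(sigma2, ext_grid)

# ... and vary sigma^2 with the externality held fixed.
s2_grid = np.linspace(0.5, 3.0, 50)
prices_vs_s2 = price_from(s2_grid, 0.4)
leaks_vs_s2 = leaked_from(s2_grid, 0.4)
```

Writing $x = \sigma_i^2 - I_i(a_i = 0, a_{-i})$, the price is $v_i x^2/(1 + x)$, which is increasing in $x$; this is why the two comparative statics move in opposite directions.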

The next proposition establishes that greater correlation between users' data, and thus greater data externality, (weakly) reduces equilibrium prices.

Proposition 2. For any action profile $a \in \{0, 1\}^n$ and any $i \in V$, the price $p^a_i$ is nonincreasing in the absolute value of the covariance between any pair of users given the action profile $a$.

The equilibrium price for $i$ is the difference between the information that the platform has about $i$ with and without $i$'s own data (multiplied by her value of privacy $v_i$). Suppose first that the correlation between $i$'s data and some other user $j$'s data increases. Mathematically, this means that $|\mathrm{Cov}(X_i, X_j \mid a)|$ increases, and user $j$'s information about $i$ becomes more accurate. Therefore, provided that $a_j = 1$, this reduces the value of user $i$'s information for predicting her type and thus depresses her benefits from protecting her data. The same result also applies when the correlation between two other users increases, that is, when $|\mathrm{Cov}(X_j, X_k \mid a)|$ increases. In this case, the platform obtains more accurate information about users $j$ and $k$, and indirectly their data become more informative about user $i$, leading to the same conclusion.

Proposition 2 establishes that equilibrium prices are nonincreasing in the correlation between

users’ data. Yet the number of users who share their data in equilibrium is non-monotone in this

correlation as we show in the next example.

Example 3. Consider a setting with three users with $\Sigma_{12} = \Sigma_{13} = 1/2$, $\Sigma_{23} = \rho$, $v_i = 3/2$, and $\sigma_i^2 = 1$ for all users. We have the following cases.

1. $\rho = 0$: the equilibrium decisions are $a_1 = 1$ and $a_2 = a_3 = 0$, the total payment is 0.75, and the total information leakage is $\sum_{i=1}^{3} I_i(a^E) = 0.75$.

2. $\rho = 1/2$: the equilibrium decisions are $a_1 = a_2 = a_3 = 1$, the total payment is 1.6, and the total information leakage is $\sum_{i=1}^{3} I_i(a^E) = 5/3 \approx 1.67$.

3. $\rho = 1$: the equilibrium decisions are $a_1 = 0$ and $a_2 = a_3 = 1$, the total payment is 0.5, and the total information leakage is $\sum_{i=1}^{3} I_i(a^E) = 1.5$.

Therefore, as we increase the correlation among users, the set of users that share information in

equilibrium may increase (from case 1 to case 2) or decrease (from case 2 to case 3). The intuition

is that as more information is leaked about a user (in this case about user 1), the value of her data

both to the platform and to herself declines, and the platform may no longer find it worthwhile to

compensate her for selling her data.
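The numbers in Example 3 can be reproduced by Gaussian conditioning. The sketch below takes the action profile reported in each case as given (it does not re-solve for the equilibrium), assumes unit noise variance for sharers, and computes the total payment as the sum of the Theorem 2 prices together with the total leakage; the helper names are hypothetical. In the second case the exact total leakage is $5/3$.

```python
import numpy as np


def leaked(sigma, sharers, i):
    """Leaked information about user i when users in `sharers` share (unit noise variance)."""
    s = list(sharers)
    if not s:
        return 0.0
    cov_i = sigma[i, s]
    return float(cov_i @ np.linalg.solve(sigma[np.ix_(s, s)] + np.eye(len(s)), cov_i))


def summarize(sigma, v, a):
    """Total payment (sum of Theorem 2 prices) and total leakage for profile a."""
    n = len(a)
    sharers = [j for j in range(n) if a[j] == 1]
    total_leak = sum(leaked(sigma, sharers, i) for i in range(n))
    payment = 0.0
    for i in sharers:
        others = [j for j in sharers if j != i]
        payment += v[i] * (leaked(sigma, sharers, i) - leaked(sigma, others, i))
    return payment, total_leak


def example3_sigma(rho):
    return np.array([[1.0, 0.5, 0.5], [0.5, 1.0, rho], [0.5, rho, 1.0]])


v = [1.5, 1.5, 1.5]
cases = {0.0: (1, 0, 0), 0.5: (1, 1, 1), 1.0: (0, 1, 1)}   # profiles as reported in the text
for rho, a in cases.items():
    payment, total_leak = summarize(example3_sigma(rho), v, a)
    print(f"rho={rho}, a={a}: payment={payment:.3f}, leakage={total_leak:.3f}")
```

For $\rho = 1$ the matrix $\Sigma$ is singular (users 2 and 3 have identical types), but the signal covariance $\Sigma_{TT} + I$ stays invertible, so the computation is still well-defined.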

The next proposition, however, shows that equilibrium prices are nonincreasing in the set of users sharing their data.

Proposition 3. For two action profiles $a, a'$ with $a' \geq a$, we have $p^{a'}_i \leq p^a_i$ for all $i \in V$ for which $a_i = 1$.

Proposition 3 follows from Theorem 2 and Lemma 1. In particular, using Theorem 2, the equilibrium price for user $i$ is her additional loss of privacy (the increase in information leakage multiplied by $v_i$) if she shares her data. From the submodularity of information leakage (Lemma 1), the additional information the user leaks about herself decreases when more people share their data.⁹

⁹ The proposition covers the prices for users sharing their data, since prices for those not sharing are not well-defined.
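Proposition 3 can also be spot-checked numerically. The sketch below draws a hypothetical positive definite covariance matrix for four users and arbitrary values of privacy, computes Theorem 2 prices (as $v_i$ times user $i$'s marginal leakage, under unit noise variance) for every action profile, and counts cases where a user who shares under $a$ faces a higher price under a larger profile $a' \geq a$. It is an illustration on one random instance, not a proof.

```python
import itertools

import numpy as np


def leaked(sigma, sharers, i):
    """Leaked information about user i when users in `sharers` share (unit noise variance)."""
    s = list(sharers)
    if not s:
        return 0.0
    cov_i = sigma[i, s]
    return float(cov_i @ np.linalg.solve(sigma[np.ix_(s, s)] + np.eye(len(s)), cov_i))


def price(sigma, v, a, i):
    """Theorem 2 price for a sharing user i: v_i times her marginal leakage."""
    sharers = [j for j in range(len(a)) if a[j] == 1]
    others = [j for j in sharers if j != i]
    return v[i] * (leaked(sigma, sharers, i) - leaked(sigma, others, i))


rng = np.random.default_rng(0)
n = 4
A = rng.normal(size=(n, n))
sigma = A @ A.T / n + 0.1 * np.eye(n)    # hypothetical positive definite covariance
v = rng.uniform(0.5, 2.0, size=n)        # hypothetical values of privacy

violations = 0
profiles = list(itertools.product((0, 1), repeat=n))
for a in profiles:
    for a_big in profiles:
        if all(x <= y for x, y in zip(a, a_big)):          # a_big >= a element-wise
            for i in range(n):
                if a[i] == 1 and price(sigma, v, a_big, i) > price(sigma, v, a, i) + 1e-12:
                    violations += 1
print("violations:", violations)
```

The small tolerance absorbs floating-point noise when $a' = a$ or when extra sharers are uncorrelated with user $i$.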

3.6 Inefficiency

This subsection presents one of our main results, documenting the extent of inefficiency in data

markets.

First note that all users with value of privacy at most 1 will always share their data in equilibrium. For future reference, we state this straightforward result as a lemma.

Lemma 3. All users with value of privacy $v_i \leq 1$ share their data in equilibrium.¹⁰

Motivated by this lemma, we partition users into two sets, those with value of privacy at most 1 ("low-value users") and those above ("high-value users"):

$$V^{(l)} = \{i \in V : v_i \leq 1\} \quad \text{and} \quad V^{(h)} = \{i \in V : v_i > 1\}.$$

We also denote by $v^{(h)}$ and $v^{(l)}$ the vectors of valuations of privacy for high-value and low-value users, respectively. Lemma 3 then implies that $a^E_i = 1$ for all $i \in V^{(l)}$.

The next theorem provides conditions for efficiency and inefficiency. More precisely, we show

that if every high-value user is uncorrelated with all other users, then equilibrium is efficient.

Otherwise, if either a high-value user is correlated with a low-value user or two high-value users

are correlated, there exists a set of valuations (consistent with the set of high and low-value users)

such that any equilibrium is inefficient.

Theorem 3. 1. Suppose every high-value user is uncorrelated with all other users. Then the equilibrium is efficient.

2. Suppose at least one high-value user is correlated (has a non-zero correlation coefficient) with a low-value user. Then there exists $\bar{v} \in \mathbb{R}^{|V^{(h)}|}$ such that for $v^{(h)} \geq \bar{v}$ the equilibrium is inefficient.

3. Suppose every high-value user is uncorrelated with all low-value users and at least one high-value user is correlated with another high-value user. Let $\tilde{V}^{(h)} \subseteq V^{(h)}$ be the subset of high-value users correlated with at least one other high-value user. Then for each $i \in \tilde{V}^{(h)}$ there exists $\bar{v}_i > 0$ such that if $v_i < \bar{v}_i$ for some $i \in \tilde{V}^{(h)}$, the equilibrium is inefficient.

Theorem 3 clarifies the source of inefficiency in our model. If high-value users are not correlated with others, the equilibrium is efficient. In this case, there may still be data externalities among low-value users and these may affect market prices (and the distribution of economic gains between the users and the platform). But they do not create a loss of privacy for users who prefer not to share their data.

¹⁰ The only subtlety here is about users with $v_i = 1$. If these users' information is correlated with others who are already sharing, their equilibrium price will be strictly less than 1, and this will make it strictly beneficial for the platform to purchase their data. If they are correlated with others who are not sharing, then the platform would still like to purchase these data because of the additional reduction in the mean square error of its estimates of others' types they enable. When such an individual is uncorrelated with anybody else, then the platform would be indifferent between purchasing her data and not. In this case, for simplicity of notation, we suppose that it still purchases.

However, the second part of the theorem shows that if high-value users are correlated with low-value users, the equilibrium is typically inefficient. The additional condition $v^{(h)} \geq \bar{v}$ is not a restrictive one, as highlighted in Example 4 below, and it rules out cases in which high-value users suffer only a small loss of privacy but generate socially valuable information about low-value users. In general, the inefficiency identified in this part of the theorem can take one of two forms: either high-value users do not share their data but still suffer a loss of privacy because of information leaked about them, or, given the amount of information already leaked about them, high-value users decide to share their data themselves (despite their initial reluctance to do so).

Finally, the third part of the theorem covers the remaining case, where high-value users are uncorrelated with low-value users but are correlated among themselves. The equilibrium is again inefficient, because the platform can induce some of them to share their data (even though individually each would prefer not to). This is because when a subset of them share, this compromises the privacy of others, depresses data prices, and may incentivize them to share too (in turn further depressing data prices). This inefficiency applies when some high-value users have intermediate values of privacy (i.e., $v_i \in (1, \bar{v}_i)$), since those with sufficiently high value of privacy cannot be induced to share their data.

Overall, this theorem highlights that inefficiency in data markets originates from the combination of sufficiently high value attached to privacy by some users and their correlation with other users. It therefore emphasizes that inefficiency in our model is tightly linked to data externalities.

3.7 Are Data Markets Beneficial?

Theorem 3 focuses on the comparison of the market equilibrium to the first best. This is a tough comparison for the market because in the first best some users share their data and benefit from market transactions, while others do not share. A lower bar for data markets is whether they achieve positive social surplus, so that any inefficiencies they create are (partially) compensated by benefits for other agents. We next show that this is not necessarily the case and provide a sufficient condition for the equilibrium (social) surplus to be negative, so that shutting down data markets altogether would improve social surplus (and thus utilitarian welfare). Let us also introduce the following notation: for any action profile $a \in \{0, 1\}^n$, we let $I_i(T)$ denote the leaked information about user $i$, where $T = \{j \in V : a_j = 1\}$ is the set of users who share their data.

Proposition 4. We have

$$\text{Social surplus}(a^E) \leq \sum_{i \in V^{(l)}} (1 - v_i) I_i(V) - \sum_{i \in V^{(h)}} (v_i - 1) I_i(V^{(l)}).$$

This implies that if

$$\sum_{i \in V^{(h)}} (v_i - 1) I_i(V^{(l)}) > \sum_{i \in V^{(l)}} (1 - v_i) I_i(V), \qquad (6)$$

then the equilibrium surplus is negative and utilitarian welfare improves if data markets are shut down.

This proposition follows immediately from Lemma 3. The first term is an upper bound on the gain in social surplus from the sharing decisions of low-value users (even if these gains do not necessarily accrue to the users themselves and are mainly captured by the platform). This expression is an upper bound because we are evaluating this term under the assumption that all users share their data, thus maximizing the amount of socially beneficial information about low-value users. The second term is a lower bound on the loss of privacy of high-value users. It is a lower bound because the loss of privacy is evaluated for the minimal set of agents, the low-value ones, who always share their data (in equilibrium a superset of $V^{(l)}$ will share their data).

We also add that leaked information in this proposition is only a function of the matrix Σ as

shown in Theorem 2, so the right-hand side is in terms of model parameters and does not depend

on equilibrium objects.
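Since the right-hand side of Proposition 4 depends only on $\Sigma$ and the values of privacy, it can be evaluated without solving for the equilibrium. A minimal sketch, assuming unit noise variance and computing $I_i(T)$ by Gaussian conditioning; `prop4_bound` is a hypothetical name, and the two-user instance ($v_1 = 0.5$, $v_2 = 3$, $\Sigma_{12} = 0.8$) is chosen for illustration so that condition (6) holds.

```python
import numpy as np


def leaked(sigma, sharers, i):
    """I_i(T): leaked information about user i when the users in T share (unit noise variance)."""
    s = list(sharers)
    if not s:
        return 0.0
    cov_i = sigma[i, s]
    return float(cov_i @ np.linalg.solve(sigma[np.ix_(s, s)] + np.eye(len(s)), cov_i))


def prop4_bound(sigma, v):
    """Right-hand side of Proposition 4."""
    n = len(v)
    low = [i for i in range(n) if v[i] <= 1]
    high = [i for i in range(n) if v[i] > 1]
    everyone = list(range(n))
    gain = sum((1 - v[i]) * leaked(sigma, everyone, i) for i in low)   # evaluated at T = V
    loss = sum((v[i] - 1) * leaked(sigma, low, i) for i in high)       # evaluated at T = V^(l)
    return gain - loss


rho = 0.8
sigma = np.array([[1.0, rho], [rho, 1.0]])
v = [0.5, 3.0]          # user 1 low-value, user 2 high-value
bound = prop4_bound(sigma, v)
print("upper bound on equilibrium surplus:", bound)
```

A negative value means condition (6) holds, so equilibrium surplus is negative for this instance.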

The next proposition provides a sufficient condition in terms of values of privacy and correlations between data that ensures condition (6) and implies that the equilibrium necessarily has negative social surplus.

Proposition 5. Suppose

$$\sum_{i \in V^{(h)}} (v_i - 1) \left( \frac{\sum_{j \in V^{(l)}} \Sigma_{ij}^2}{\|\Sigma^{(l)}\|_1 + 1} \right) > \sum_{i \in V^{(l)}} (1 - v_i),$$

where $\|\Sigma^{(l)}\|_1$ denotes the 1-norm of the submatrix of $\Sigma$ which only includes the rows and columns corresponding to low-value users. Then the equilibrium surplus is negative.¹¹

Proposition 5 provides explicit sufficient conditions in terms of the values of privacy and the

correlation between high and low-value users for negative equilibrium surplus.

Example 4. We consider a setting with two communities, each of size 10. Suppose that all users in community 1 are low-value and have a value of privacy equal to 0.9, while all users in community 2 are high-value (with $v_h > 1$). We also take the variances of all user data to be 1, the correlation between any two users who belong to the same community to be 1/20, and the correlation between any two users who belong to different communities to be $\rho$. Figure 3 depicts equilibrium surplus as a function of $v_h$ and $\rho$. The curve in the figure represents the combinations of these two variables for which the social surplus is equal to zero. Moving in the northeast direction reduces equilibrium surplus, and hence the shaded area has negative surplus. Consequently, in this part of the parameter space, shutting down data markets improves utilitarian social welfare.

Two points are worth noting. First, relatively small values of the correlation coefficient $\rho$ are sufficient for social surplus to be negative. Second, when $v_h$ is very close to 1, the social surplus is always positive because the negative surplus from high-value users is compensated by the social benefits their data sharing creates for low-value users.
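For the configuration of Example 4, the sufficient condition of Proposition 5 is easy to evaluate. The sketch below builds the $20 \times 20$ covariance matrix described in the example and checks the inequality for a given $(\rho, v_h)$, using the 1-norm of footnote 11 (maximum absolute row sum). Because Proposition 5 is only sufficient, a pair that fails the test may still have negative surplus, so this does not reproduce the boundary in Figure 3; the function names and the two test pairs are illustrative.

```python
import numpy as np


def example4_sigma(rho, size=10, within=1 / 20):
    """Covariance for two communities: unit variances, `within` inside, `rho` across."""
    n = 2 * size
    sigma = np.full((n, n), rho)
    sigma[:size, :size] = within
    sigma[size:, size:] = within
    np.fill_diagonal(sigma, 1.0)
    return sigma


def prop5_holds(sigma, v):
    """Check the sufficient condition of Proposition 5."""
    v = np.asarray(v)
    low = np.flatnonzero(v <= 1)
    high = np.flatnonzero(v > 1)
    sigma_low = sigma[np.ix_(low, low)]
    norm1 = np.abs(sigma_low).sum(axis=1).max()      # footnote 11: max_i sum_j |A_ij|
    lhs = sum((v[i] - 1) * np.sum(sigma[i, low] ** 2) / (norm1 + 1) for i in high)
    rhs = np.sum(1 - v[low])
    return lhs > rhs


v_low = [0.9] * 10
print(prop5_holds(example4_sigma(0.1), v_low + [4.0] * 10))
print(prop5_holds(example4_sigma(0.05), v_low + [2.0] * 10))
```

Here $\|\Sigma^{(l)}\|_1 = 1 + 9/20 = 1.45$, so the condition reduces to $(v_h - 1)\rho^2 > 0.0245$.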

¹¹ The 1-norm of a matrix $A$ is defined as $\|A\|_1 = \max_i \sum_{j=1}^{n} |A_{ij}|$.

Figure 3: Shaded area shows the pairs of $(\rho, v_h)$ with negative equilibrium surplus in the setting of Example 4. [Axes: value of privacy for high-value users, $v_h$ (1 to 10); cross-community correlation, $\rho$ (0.02 to 0.16).]

4 Competition Among Platforms

In this section we generalize the main results from the previous section to a setting in which

multiple platforms compete for (the data of) users. For simplicity we focus on the case in which

there are two platforms. Formally, we are dealing with a three-stage game in which first users

decide which platform to join (if any), then platforms simultaneously offer prices for data, and

then finally all users simultaneously decide whether to share their data. We start by assuming

that data externalities are only among users joining the same platform, but then generalize this to

the case in which the platform learns valuable information about users that are not on its platform

as well. At the end of the section, we also discuss the case in which platforms first set prices for

data in order to attract users.

4.1 Information and Payoffs

For any $i \in V$, we denote by $b_i \in \{0, 1, 2\}$ the joining decision of user $i$ in the first-stage game, where $b_i = 0$ means user $i$ does not join, $b_i = 1$ means she joins platform 1, and $b_i = 2$ stands for joining platform 2. Let us also define

$$J_1 = \{i \in V : b_i = 1\} \quad \text{and} \quad J_2 = \{i \in V : b_i = 2\}$$

as the sets of users joining the two platforms.

Similar to the monopoly case in the previous section, the payoff of a platform is a function of leaked information about users and payments to users. So for platform $k \in \{1, 2\}$, we have

$$U^{(k)}(J_k, a^{J_k}, p^{J_k}) = \sum_{i \in J_k} I_i(a^{J_k}) - \sum_{i \in J_k :\, a^{J_k}_i = 1} p^{J_k}_i, \qquad (7)$$

where $a^{J_k} \in \{0, 1\}^{|J_k|}$ denotes the sharing decision of users belonging to this platform, and $p^{J_k}$ denotes the vector of prices the platform offers to users in $J_k$.

The payoff of a user has three parts. First, each user receives a valuable service from the platform she joins. Since we are modeling joining decisions in this section, we will be more explicit about this "joining value" and assume that it depends on who else joins the platform. We therefore write this part of the payoff as $c_i(J_{b_i})$ for user $i$ joining platform $b_i$, with the convention that $J_0 = \emptyset$, and also normalize $c_i(J) = 0$ for all $J \not\ni i$ and for all $i \in V$. Second, the user suffers a disutility due to loss of privacy from leaked information as before, and we again denote the value of privacy for user $i$ by $v_i$. Third, she receives benefits from any payments from the platform in return for the data she shares. Thus the payoff to user $i$ joining platform $k \in \{1, 2\}$ can be written as

$$u_i(J_k, a_i, a^{J_k}_{-i}, p^{J_k}) = \begin{cases} p^{J_k}_i - v_i I_i\left(a_i = 1, a^{J_k}_{-i}\right) + c_i(J_k), & a_i = 1, \\ -v_i I_i\left(a_i = 0, a^{J_k}_{-i}\right) + c_i(J_k), & a_i = 0. \end{cases}$$

With our convention, when the user chooses $b_i = 0$, this payoff is equal to zero: there is no data sharing decision, the payment is equal to zero, leaked information is equal to zero, and $c_i(\emptyset) = 0$.

The timing of events is as follows.

1. Users simultaneously decide which platform, if any, to join, i.e., $b = \{b_i\}_{i \in V}$, which determines $J_1$ and $J_2$.

2. Given $J_1$ and $J_2$, the two platforms simultaneously offer price vectors $p^{J_1}$ and $p^{J_2}$.

3. Given $J_1$ and $J_2$ and $p^{J_1}$ and $p^{J_2}$, users simultaneously make their data sharing decisions, $a : \{0, 1, 2\}^n \times \mathbb{R}^n \to \{0, 1\}$.

The assumption that users join platforms before prices are set is adopted both for simplicity and to capture the fact that there is currently a limited ability for platforms to attract customers by offering prices for data. We discuss this type of price competition and its implications below. Even though prices are offered at the second stage, users will anticipate these prices and the implications they have for leaked information in making their joining decisions.

4.2 Equilibrium Concept

We first observe that from the second stage on, once the set of users joining a platform is determined, this game is identical to the one we analyzed in the previous section. Hence, the Stackelberg equilibrium from the second stage onwards is defined analogously. Then in the first stage, we define a joining equilibrium as a profile of joining decisions anticipating the equilibrium from the second stage onward. More formally:

Definition 2. Given a joining decision $b$ and the corresponding sets of users on the two platforms, $J_1$ and $J_2$, a pure strategy Stackelberg equilibrium from the second stage onwards is given by price vectors $p^{J_1,E}$ and $p^{J_2,E}$ and action profiles $(a^{J_1,E}, a^{J_2,E})$ such that $a^{J_k,E} \in A(p^{J_k,E})$ and

$$U^{(k)}(J_k, a^{J_k,E}, p^{J_k,E}) \geq U^{(k)}(J_k, a^{J_k}, p^{J_k}), \quad \text{for all } p^{J_k} \text{ and for all } a^{J_k} \in A(p^{J_k}),$$

for $k \in \{1, 2\}$.

Joining decision profile $b^E$ and the corresponding sets of users on the two platforms, $J^E_1$ and $J^E_2$, constitute a pure strategy joining equilibrium if no user has a profitable deviation. That is, for all $i \in V$,

$$u_i\left(J^E_{b_i}, a^{J^E_{b_i},E}, p^{J^E_{b_i},E}\right) \geq u_i\left(J^E_k \cup \{i\}, a^{J^E_k \cup \{i\},E}, p^{J^E_k \cup \{i\},E}\right) \quad \text{for } k \neq b_i,$$

and

$$u_i\left(J^E_{b_i}, a^{J^E_{b_i},E}, p^{J^E_{b_i},E}\right) \geq 0, \quad \text{for all } i \in J^E_{b_i}.$$

Note that the first condition for the joining equilibrium ensures that each user prefers the platform she joins to the other platform, and given our convention that $J_0 = \emptyset$, it also implies that users not joining either platform prefer this to joining one of the two platforms. The second condition makes sure that a user joining a platform receives a nonnegative payoff, since not joining either platform guarantees zero payoff.

4.3 Equilibrium Existence and Characterization

Since our focus is on situations in which users join online platforms and share their data, we

impose that joining values are sufficiently large.

Assumption 1. For each $i \in V$, we have

1. for all $J$ and $J'$ such that $i \in J$ and $J \subset J'$, we have $c_i(J') > c_i(J)$.

2. $c_i(\{i\}) > \max_{j \in V} v_j \sigma_j^2$.

This assumption implies that users receive greater services from a platform when there are more users on the platform, which captures the network effects in online services and social media. The fact that this benefit is indexed by $i$ means that users can prefer being on the same platform with different sets of other users. This assumption also imposes that even when there are no other users on a platform, the value of the services provided by the platform exceeds the cost of loss of privacy. This second aspect directly yields the next lemma, which simplifies the rest of our analysis. For the rest of this section, we impose Assumption 1 without explicitly stating it.

Lemma 4. Each user joins one of the two platforms. In other words, $b_i = 1$ or $2$ for all $i \in V$.

The next theorem is the direct analogue of Theorems 1 and 2 in the previous section and characterizes the Stackelberg equilibrium given joining decisions.

Theorem 4. Consider a joining profile $b$ with the corresponding sets of users $J_1$ and $J_2$. Then a pure strategy Stackelberg equilibrium exists and satisfies

$$U^{(k)}(J_k, a^{J_k,E}, p^{J_k,E}) \geq U^{(k)}(J_k, a^{J_k}, p^{J_k}), \quad \text{for all } p^{J_k},\ a^{J_k} \in A(p^{J_k}), \text{ and } k = 1, 2.$$

Moreover, for any $i \in J_k$ the equilibrium prices are

$$p^{J_k,E}_i = v_i \left( I_i(a^{J_k,E}) - I_i\left(a_i = 0, a^{J_k,E}_{-i}\right) \right), \qquad (8)$$

and

$$u_i\left(J^E_k, a^{J^E_k,E}, p^{J^E_k,E}\right) = -v_i I_i\left(a_i = 0, a^{J_k,E}_{-i}\right) + c_i(J_k). \qquad (9)$$

Although a pure strategy Stackelberg equilibrium exists, a pure strategy joining equilibrium may not. This is because of data externalities, which sometimes lead users to go to the platform where less of their information will be leaked.¹² The next example illustrates this.

¹² Note that even though increasing the amount of leaked information about low-value users increases social surplus, it reduces these users' payoff because it enables their platform to pay less for their data. This is the reason low-value users may prefer to have less information leaked about themselves and choose a platform with fewer other low-value users.

Example 5. Suppose that there are three users with

$$\Sigma = \begin{pmatrix} 4 & .05 & .06 \\ .05 & 4 & .05 \\ .06 & .05 & .3 \end{pmatrix},$$

and values of privacy are given by

$$v_1 = 0.01, \quad v_2 = 0.99, \quad v_3 = 1.1.$$

Also for simplicity, in this example we take $c_i$ to be a constant function $c$ for all $i$ (meaning that conditional on joining a platform, all users receive the same benefit). In this setting, user 3 has the highest value for privacy, but shares her data if she is on the same platform as user 2 (this is because her data are highly correlated with user 2's). However, she does not share her data if she is on the same platform as user 1.

We next list the possible pure strategy joining profiles and show that none of them can be an equilibrium.

1. $J_1 = \{1, 2\}$, $J_2 = \{3\}$: with this joining profile, the resulting Stackelberg equilibrium involves platform 1 buying the data of both users 1 and 2, while platform 2 does not buy user 3's data. In particular, from equation (8), the price of user 1's data can be expressed as $v_1\left(I_1(a_1 = 1, a_2 = 1) - I_1(a_1 = 0, a_2 = 1)\right)$, which yields this user a payoff of $-v_1 I_1(a_1 = 0, a_2 = 1) + c$ in this candidate equilibrium. This implies that user 1 has a profitable deviation, which is to switch to platform 2. To see that this deviation is profitable, note that after this deviation, we have $J_1 = \{2\}$ and $J_2 = \{1, 3\}$, and the resulting Stackelberg equilibrium involves platform 1 buying user 2's data, while platform 2 buys user 1's data but not user 3's data. This gives user 1 a payoff of $c$, verifying that the deviation is beneficial for her.

2. $J_1 = \{2, 3\}$, $J_2 = \{1\}$: with this joining profile, the resulting Stackelberg equilibrium involves platform 1 buying the data of both users 2 and 3, while platform 2 buys user 1's data. With a similar reasoning, user 2's payoff is $-v_2 I_2(a_2 = 0, a_3 = 1) + c$. But in this case user 2 has a profitable deviation. By switching to platform 2, equation (8) implies that she will be offered a price of $v_2\left(I_2(a_2 = 1, a_1 = 1) - I_2(a_2 = 0, a_1 = 1)\right)$ and receive a payoff of $-v_2 I_2(a_2 = 0, a_1 = 1) + c$, which exceeds her candidate equilibrium payoff.

3. $J_1 = \{1, 3\}$, $J_2 = \{2\}$: in this case, with a similar reasoning, user 3 has a profitable deviation. In the candidate equilibrium, this user receives a payoff of $-v_3 I_3(a_1 = 1, a_3 = 0) + c$, and if she deviates and switches to platform 2, she receives the greater payoff of $-v_3 I_3(a_2 = 1, a_3 = 0) + c$.

4. $J_1 = \{1, 2, 3\}$, $J_2 = \emptyset$: in this case, user 3 again has a profitable deviation and can increase her payoff from $-v_3 I_3(a_1 = 1, a_2 = 1, a_3 = 0) + c$ to $c$ by switching to platform 2. This establishes that there is no pure strategy equilibrium in this game.

Even though a pure strategy equilibrium may fail to exist, we next show that a mixed strategy

joining equilibrium always exists.

Definition 3. For any user $i \in V$, let $B_i$ be the set of probability measures over $\{1, 2\}$ and $\beta_i \in B_i$ be a mixed strategy. We also let $\beta \in \prod_{i \in V} B_i$ be a mixed strategy profile. Then $\beta^E$ is a mixed strategy joining equilibrium if

$$u_i(\beta^E_i, \beta^E_{-i}, a^E, p^E) \geq u_i(\beta_i, \beta^E_{-i}, a^E, p^E), \quad \text{for all } i \in V \text{ and } \beta_i \in B_i,$$

where $u_i(\beta_i, \beta_{-i}, a^E, p^E) = \mathbb{E}_{b_i \sim \beta_i,\, b_{-i} \sim \beta_{-i}}\left[u_i(b_i, b_{-i}, a^E, p^E)\right]$.

Theorem 5. There always exists a mixed strategy joining equilibrium in which all users join each platform

with probability 1/2.

Theorem 5 follows since when all other users are choosing one of the two platforms uniformly

at random (each with probability 1/2), each user is indifferent between the two platforms and can

thus randomize with probability 1/2 herself.

We also note that when the benefit from joining a more crowded platform is sufficiently greater than the benefit from joining a smaller platform, this may restore the existence of a pure strategy equilibrium.¹³

¹³ In Appendix B, we also prove that if all users are low-value, then the setup with competing platforms is a potential game and thus a pure strategy equilibrium exists. Moreover, we show that the social surplus of this equilibrium is always less than the social surplus under monopoly, because competition between the platforms leads to an inefficiently fragmented distribution of low-value users as they try to avoid information about them being leaked by other low-value users.

4.4 Inefficiency

The social surplus of strategy profile $(\beta, a, p)$ is defined analogously to the equilibrium (social) surplus in the previous section as

$$\text{Social surplus}(\beta, a) = \mathbb{E}_{b \sim \beta}\left[ \sum_{i \in J_1} \left( (1 - v_i) I_i(a^{J_1}) + c_i(J_1) \right) + \sum_{i \in J_2} \left( (1 - v_i) I_i(a^{J_2}) + c_i(J_2) \right) \right],$$

where the sets $J_1$ and $J_2$ are defined based on the random variable $b \sim \beta$.

A natural conjecture is that competition might redress some of the inefficiencies of data markets identified so far, either by increasing data prices or by allowing high-value users to go to a platform where less of their information will be leaked. The next example illustrates that this is not necessarily the case and competition may increase or reduce equilibrium surplus.

Example 6. Consider a setting with $V = \{1, 2\}$, $\sigma_1^2 = \sigma_2^2 = 1$, $\Sigma_{12} > 0$, and $v_1 < 1$, and take $c_i$ to be a constant function $c$ for all $i$.

• Competition improves equilibrium surplus: Suppose that $v_2 > 1$ is sufficiently large that in equilibrium user 2 never shares her data. Under monopoly, equilibrium data sharing decisions are $a^E_1 = 1$ and $a^E_2 = 0$ with prices given in Theorem 2. The equilibrium surplus is

$$(1 - v_1) I_1(a_1 = 1, a_2 = 0) + (1 - v_2) I_2(a_1 = 1, a_2 = 0) + 2c.$$

With competition, equilibrium joining decisions are $b^E_1 = 1$, $b^E_2 = 2$ and equilibrium data sharing decisions are $a^{J_1,E}_1 = 1$ and $a^{J_2,E}_2 = 0$ with prices given in equation (8). The equilibrium surplus is therefore

$$(1 - v_1) I_1(a_1 = 1, a_2 = 0) + 2c,$$

which is strictly greater than the equilibrium surplus under monopoly, because the monopoly term $(1 - v_2) I_2(a_1 = 1, a_2 = 0)$ is strictly negative when $v_2 > 1$ and $\Sigma_{12} > 0$.

• Competition reduces equilibrium surplus: Suppose that $v_2 < 1$. Under monopoly, we have $a^E_1 = 1$ and $a^E_2 = 1$ with prices given in Theorem 2. The equilibrium surplus is

$$(1 - v_1) I_1(a_1 = 1, a_2 = 1) + (1 - v_2) I_2(a_1 = 1, a_2 = 1) + 2c.$$

With competition, the equilibrium involves $b^E_1 = 1$, $b^E_2 = 2$, $a^{J_1,E}_1 = 1$, and $a^{J_2,E}_2 = 1$ with prices given in equation (8). The equilibrium surplus is

$$(1 - v_1) I_1(a_1 = 1, a_2 = 0) + (1 - v_2) I_2(a_1 = 0, a_2 = 1) + 2c,$$

which is strictly less than the surplus under monopoly, since with $\Sigma_{12} > 0$ each user's leaked information is strictly larger when both users' data reach the same platform, and both weights $1 - v_i$ are positive.
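The two bullets of Example 6 can be compared numerically. The sketch below takes the sharing profiles stated in the example as given, assumes unit noise variance, and models competition as each user sitting alone on her own platform, so a platform learns only from its own user's data; the values $\Sigma_{12} = 0.6$, $v_1 = 0.5$, $v_2 \in \{3, 0.5\}$, and $c = 1$, as well as the helper names, are hypothetical.

```python
import numpy as np


def leaked(sigma, sharers, i):
    """Leaked information about user i when users in `sharers` share (unit noise variance)."""
    s = list(sharers)
    if not s:
        return 0.0
    cov_i = sigma[i, s]
    return float(cov_i @ np.linalg.solve(sigma[np.ix_(s, s)] + np.eye(len(s)), cov_i))


def monopoly_surplus(sigma, v, sharers, c):
    """Both users on one platform: every sharer's data informs estimates of everyone."""
    return sum((1 - v[i]) * leaked(sigma, sharers, i) for i in range(len(v))) + len(v) * c


def competition_surplus(sigma, v, sharers, c):
    """Each user on her own platform: only her own data (if shared) informs her platform."""
    return sum((1 - v[i]) * leaked(sigma, [i] if i in sharers else [], i)
               for i in range(len(v))) + len(v) * c


rho, c = 0.6, 1.0
sigma = np.array([[1.0, rho], [rho, 1.0]])

# First bullet: v_2 > 1, only user 1 shares under either market structure.
v_high = [0.5, 3.0]
mono1 = monopoly_surplus(sigma, v_high, [0], c)
comp1 = competition_surplus(sigma, v_high, [0], c)

# Second bullet: v_2 < 1, both users share.
v_low = [0.5, 0.5]
mono2 = monopoly_surplus(sigma, v_low, [0, 1], c)
comp2 = competition_surplus(sigma, v_low, [0, 1], c)
print(f"v_2 > 1: monopoly={mono1:.3f}, competition={comp1:.3f}")
print(f"v_2 < 1: monopoly={mono2:.3f}, competition={comp2:.3f}")
```

The comparison turns on a single channel: separating users removes the leakage about user 2 through user 1's data, which helps when user 2 values privacy highly and hurts when that leaked information is socially valuable.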

The next theorem, which is the analogue of Theorem 3, characterizes the conditions for efficiency and inefficiency in this case.

Theorem 6. 1. Suppose every high-value user is uncorrelated with all other users. Then the equilibrium is efficient if and only if c